In January 2020, the United Kingdom’s Information Commissioner’s Office (ICO) released a Code of Practice to protect children’s privacy online. The code isn’t the first piece of legislation that specifically seeks to limit how organizations handle the data of minors, but it is significantly broader than previous laws. Among other things, the ICO’s code demands, “The best interests of the child should be a primary consideration when you design and develop online services likely to be accessed by a child.”

The UK’s new rule — and the data privacy scandals that brought it into being — should grab the attention of the C-suite at every company that counts children among its users. This law and others like it demand a shift in both the technical measures and the philosophy with which businesses handle the data of minors.

As Elizabeth Denham, the UK’s information commissioner, has argued: “There are laws to protect children in the real world — film ratings, car seats, age restrictions on drinking and smoking. We need our laws to protect children in the digital world too.”

Why Children’s Data Privacy Is a Hot-Button Issue in 2020

Concerns about the safety of children online are as old as the internet itself, but the increasing power of big data has brought those concerns back to the forefront. And while adults can make educated choices about their own privacy, many are uncomfortable with recent news stories of children being monitored and targeted by corporations and potentially exposed to hackers.

YouTube Caught With Its Hand in the Cookie Jar

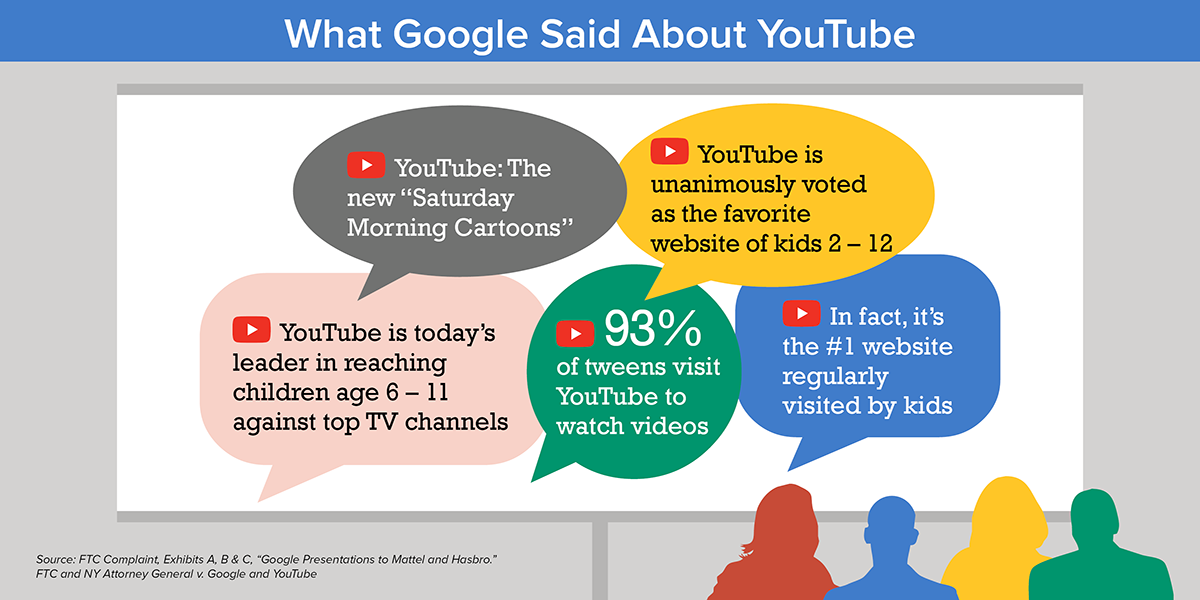

In September 2019, YouTube reached a $170 million settlement with the FTC for allegedly breaking the Children’s Online Privacy Protection Act (COPPA) Rule (more on that below). YouTube was accused of using cookies to collect personal information from viewers of kid-targeted channels and using the cookies to deliver targeted ads without parental consent. The FTC noted that YouTube publicly bragged about its popularity with kids and made millions of dollars marketing to them and therefore could not claim ignorance about its legal obligations under COPPA.

The Dress-Up App That Toyed With Data

In April 2019, the FTC issued another COPPA fine to i-Dressup, an app that offered games and virtual dress-up features to children. The company let kids fill out registration forms and sent a link to their parents for approval, but it failed to delete the children’s information even when parents never gave their consent. It also failed to implement adequate security: it transmitted passwords in plain text and did not monitor for security incidents, for example. As a result, a hacker infiltrated the company and accessed information about millions of users, 245,000 of whom had reported that they were under 13.

Ring Camera’s Vulnerabilities Led to a Privacy Nightmare

Arguably the biggest recent child privacy story came in December 2019, when several owners of Ring security cameras reported that their cameras had been hacked. In one particularly troubling incident, a young girl in Mississippi was terrified when an unknown man calling himself Santa Claus started speaking to her through the camera’s speakers.

Ring (which is owned by Amazon) asserted that its system had not been hacked and that these users were the victims of a credential-stuffing attack, in which attackers try username and password pairs leaked from other services. Amazon reminded users to use multifactor authentication and unique username/password combinations when registering new devices. That’s certainly good advice, but the idea of putting cameras that are vulnerable to intrusion in their children’s bedrooms still left many customers uneasy.

The UK’s Law Demands That Children’s Privacy Be Built Into Design

The UK’s ICO developed its code in the wake of these stories and in the midst of growing public understanding of personal data as a precious resource. The UK’s code — its full name is the Age Appropriate Design Code — establishes 15 standards to protect children’s privacy online.

The Requirements of the Age Appropriate Design Code

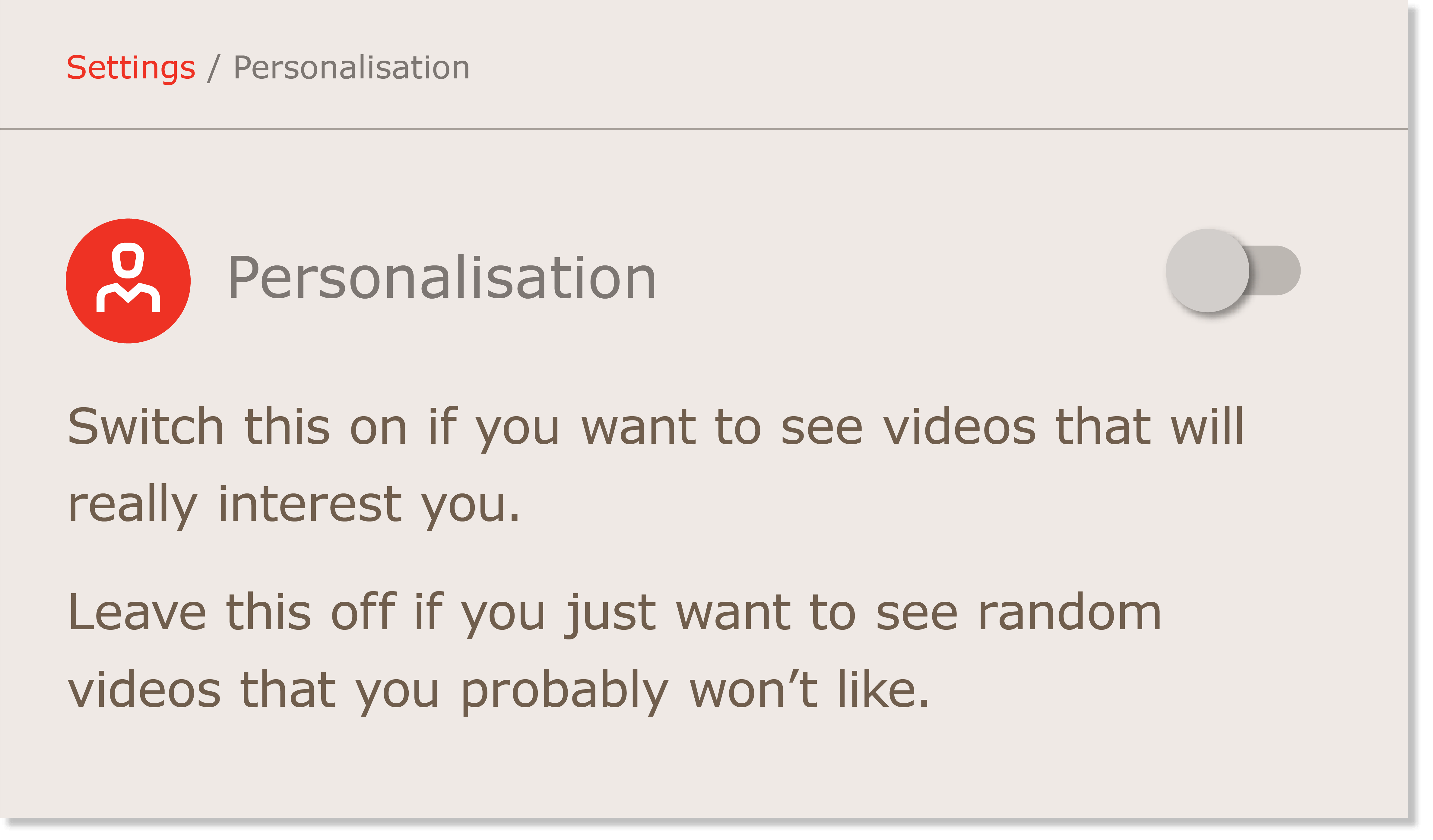

The code applies to organizations that design, develop, or provide online services “likely to be accessed by children,” such as apps, streaming services, social media, games, and internet-connected toys. It requires privacy settings to default to high when a child downloads an app or visits a site. Geolocation services should be turned off by default for children, data collection and sharing should be minimal, and profiling that is used to deliver targeted ads should also be disabled by default.
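As a rough illustration of "high privacy by default," the sketch below creates the starting settings for a new profile. The class, field names, and adult defaults are hypothetical and are not taken from the ICO's code; the only point is that every option starts at its most protective value when the user is likely to be a child.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacySettings:
    """Hypothetical per-profile settings; the field names are illustrative."""
    geolocation_enabled: bool
    ad_profiling_enabled: bool
    data_sharing_enabled: bool
    profile_visibility: str  # "private" or "public"


def default_settings(likely_child: bool) -> PrivacySettings:
    """Return the starting settings for a newly created profile."""
    if likely_child:
        # Every option starts at its most protective value.
        return PrivacySettings(
            geolocation_enabled=False,
            ad_profiling_enabled=False,
            data_sharing_enabled=False,
            profile_visibility="private",
        )
    # Assumption: the service picks its own adult defaults. These values only
    # show that adult defaults may differ from the child defaults.
    return PrivacySettings(
        geolocation_enabled=False,
        ad_profiling_enabled=True,
        data_sharing_enabled=True,
        profile_visibility="public",
    )
```

Keeping the defaults in one function makes it harder for a new feature to ship with a permissive setting for children by accident.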

In addition to stipulating a high baseline for privacy settings, the ICO’s code forbids using “nudge techniques” to convince children to weaken their privacy. Nudge techniques are tools for leading users to behave as the designer would prefer, typically by making the alternative unappealing, cumbersome, or easy to miss.

The ICO’s code is unique for its emphasis on the “best interests of the child.” Organizations that use the personal data of minors are encouraged to consider how they can:

Keep children safe from exploitation (whether commercial or sexual),

Protect health and well-being (physical, psychological, and emotional),

Support parents in protecting the best interests of children, and

Recognize children’s ability to form their own views as they develop and “give due weight to” those views.

How Businesses Are Responding to the Code

The code still needs to be officially approved by Parliament, but assuming it passes, organizations will have a year to update their policies before the rules take effect, most likely in fall 2021.

While the ICO has stated its commitment to helping organizations comply with the new rules, it has also made clear that it intends to enforce the law rigorously. Enforcement may include reprimands, orders, and, “for serious breaches of the data protection principles... fines of up to €20 million or 4% of your annual worldwide turnover, whichever is higher.”

Some businesses have complained that having “the best interests of a child” in mind when designing a product is a vague directive, especially for online services used by adults and children alike. But the ICO has been firm in its commitment to this principle. As Commissioner Denham said in her statement: “In an age when children learn how to use an iPad before they ride a bike, it is right that organisations designing and developing online services do so with the best interests of children in mind. Children’s privacy must not be traded in the chase for profit.”

The Global State of Children’s Privacy Legislation

The UK’s code is notable for going further than previous laws in protecting the digital privacy of minors, but it’s far from the only such law on the books.

The United States enacted COPPA in 1998; the FTC’s implementing rule took effect in 2000 and was amended in 2013. The law prohibits online services from collecting the personal information of children under 13 without parental consent. COPPA applies to services that are designed for children or that have “actual knowledge” that they are collecting data from children.

The California Consumer Privacy Act (CCPA) is broadly consistent with COPPA, with a few tweaks. Under the CCPA, businesses can’t sell the personal information of minors under 16 without permission. If the child is under 13, permission must be given by a parent or guardian, but minors aged 13 to 15 can opt in to data sales themselves.
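The CCPA age bands described above can be sketched as a small lookup. The enum and function names are hypothetical, and the sketch assumes the service already knows the user's age; it is an illustration of the rule as summarized here, not legal guidance.

```python
from enum import Enum


class SaleConsent(Enum):
    """Who must opt in before a user's personal information is sold."""
    PARENT_OPT_IN = "parent_or_guardian_opt_in"
    MINOR_OPT_IN = "minor_opt_in"
    ADULT_RULES = "standard_rules"


def ccpa_sale_consent(age: int) -> SaleConsent:
    """Map a known age to the consent needed before selling personal data."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 13:
        # Under 13: a parent or guardian must give permission.
        return SaleConsent.PARENT_OPT_IN
    if age < 16:
        # Ages 13 through 15: the minor can opt in personally.
        return SaleConsent.MINOR_OPT_IN
    # 16 and over: the minor-specific opt-in rule no longer applies.
    return SaleConsent.ADULT_RULES
```

For example, `ccpa_sale_consent(14)` returns `SaleConsent.MINOR_OPT_IN`.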

Other states, including Connecticut and Massachusetts, attempted to pass similar legislation in 2019. Thus far, those bills have failed to pass, but with more data privacy legislation being cooked up all the time, the trend isn’t going anywhere.

Meanwhile, across the pond, the General Data Protection Regulation (GDPR) requires parental consent to process the data of children under 16, although member states can modify that to be as low as 13. In addition, the GDPR applies to data collection, not just data sales. Unlike the CCPA, it provides no exemption for organizations that claim they are unaware a user is a child.
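Because each member state can set its own threshold between 13 and 16, a service operating in several countries might treat the age of consent as configuration rather than a constant. The function and parameter names below are hypothetical, and the sketch deliberately takes the national threshold from the caller instead of hard-coding any country's value.

```python
GDPR_DEFAULT_AGE = 16  # threshold when a member state sets no lower age
GDPR_MINIMUM_AGE = 13  # lowest threshold a member state may choose


def needs_parental_consent(
    age: int, national_threshold: int = GDPR_DEFAULT_AGE
) -> bool:
    """Return True when the user is below the applicable age of consent."""
    if not GDPR_MINIMUM_AGE <= national_threshold <= GDPR_DEFAULT_AGE:
        raise ValueError("national threshold must be between 13 and 16")
    return age < national_threshold
```

A 15-year-old therefore needs parental consent under the default threshold, but not in a member state that has lowered the threshold to 13.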

For Businesses, Authorization Is Central to Compliance

All the laws we’ve discussed demand that online services treat users differently if they are children, but this raises the question: How do you know the person on the other end of the screen is a child? For that matter, how do you know that the person giving consent for data use is actually the child’s parent or guardian? The answer is a matter of authorization.

Most people who share streaming accounts with their families have seen authorization separate the adults (who will be recommended the latest Scorsese drama) from the children (who will be steered toward My Little Pony). Media companies do this by creating multiple user profiles under a single account, enabling greater personalization and ensuring that kids’ profiles are treated differently.

There are several authorization models for accomplishing this, which can support varying degrees of complexity and customization. For example, in the Attribute-Based Access Control (ABAC) model, permissions are assigned based on a user’s attributes, including age. In contrast, in the Relationship-Based Access Control (ReBAC) model, permissions are assigned based on relationships between users, such as parent and child, which enables policies that reflect real-world user dynamics.
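The difference between the two models can be shown with a minimal sketch. Everything here is hypothetical: the `Profile` class, the `guardian_of` relation name, and the tuple format are illustrative choices, not the API of any particular authorization product.

```python
from dataclasses import dataclass
from typing import Set, Tuple


@dataclass(frozen=True)
class Profile:
    name: str
    age: int


def abac_can_view(profile: Profile, minimum_age: int) -> bool:
    """ABAC: the decision depends on an attribute of the user (here, age)."""
    return profile.age >= minimum_age


# ReBAC: relationships are stored as (subject, relation, object) tuples.
Relationship = Tuple[str, str, str]


def rebac_can_manage(
    actor: str, target: str, relationships: Set[Relationship]
) -> bool:
    """ReBAC: the decision depends on how the actor is related to the target."""
    return (actor, "guardian_of", target) in relationships


kid = Profile(name="kid", age=9)
relationships = {("parent", "guardian_of", "kid")}

abac_can_view(kid, minimum_age=13)                  # False: too young
rebac_can_manage("parent", "kid", relationships)    # True: parent is guardian
rebac_can_manage("kid", "parent", relationships)    # False: no such relation
```

In practice the two are often combined: an attribute such as age decides what a child's profile may see, while a relationship decides which adult may change that profile's settings or give consent on its behalf.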

Even when relationships are mapped this way, it can be tricky for companies to verify the relationship between an adult and child. Most companies consider the person who can pay to be an adult, and so they allow the subscription holder to indicate which profiles belong to children. The ICO also lays out age verification methods, which businesses can choose from based on their resources and the risk level of the data they’re collecting:

Self-declaration: A user states their age but gives no other evidence. This is suitable only for low-risk situations.

Artificial intelligence: The service estimates a user’s age by analyzing how they interact with it. This method provides a greater level of certainty but has privacy pitfalls of its own. Under the ICO’s code, businesses that go this route must inform users up front and adhere to the principles of data minimization and purpose limitation for all data collected this way.

Third-party age verification services: These services use an “attribute” system to confirm the age of a user without the website or app needing to request additional personal information. That presents an advantage for businesses, but you must be careful to ensure that these third parties are reasonably accurate and that they themselves are not violating data protection laws.

Account-holder confirmation: When you know an existing account holder is an adult, they can confirm the age of additional users through an email or a text to a known device.

Technical measures: These can strengthen self-declaration. For example, if a user is denied access to a service for being too young, you may prohibit them from immediately resubmitting the form with a different age.

Hard identifiers: These are formal identity documents, such as passports. This is an option of last resort because some children don’t have access to such documents, and requiring them may be discriminatory to those users.
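The technical measure mentioned in the list, blocking an immediate resubmission with a different age, could look something like the sketch below. The `AgeGate` class, the `client_id` parameter (for example, a session identifier), and the cool-down length are all assumptions made for illustration; a real service would also need to decide how to identify a client without collecting more data than necessary.

```python
import time
from typing import Callable, Dict


class AgeGate:
    """Self-declared age check with a cool-down after a rejected attempt."""

    def __init__(
        self,
        minimum_age: int,
        cooldown_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.minimum_age = minimum_age
        self.cooldown_seconds = cooldown_seconds
        self._clock = clock
        # Maps a client identifier to the time of its last rejected attempt.
        self._rejected_at: Dict[str, float] = {}

    def submit(self, client_id: str, declared_age: int) -> str:
        """Return "allowed", "denied", or "locked" for this submission."""
        now = self._clock()
        rejected = self._rejected_at.get(client_id)
        if rejected is not None and now - rejected < self.cooldown_seconds:
            # A recent rejection blocks retries, whatever age is declared.
            return "locked"
        if declared_age < self.minimum_age:
            self._rejected_at[client_id] = now
            return "denied"
        self._rejected_at.pop(client_id, None)
        return "allowed"
```

With `gate = AgeGate(minimum_age=13, cooldown_seconds=3600)`, a client that submits an age of 10 is denied, and a second submission claiming to be 30 is locked out until the cool-down has passed. The injectable `clock` exists only to make the behavior easy to test.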

Children’s Data Privacy Takes a Village

The push for giving specific data rights to children shouldn’t discourage organizations from designing products that cater to them. Nor should organizations use data privacy to lock kids out of the digital world; rather, they should help kids become thoughtful participants in that world. Hopefully, these laws will inspire companies to be more thoughtful in crafting policies that respect children’s curiosity as well as their safety.

About the author

Adam Nunn

Sr. Director of Governance, Risk, and Compliance (Auth0 Alumni)