Introduction

Being in the AI startup space isn’t just about building innovative solutions – it’s about building a secure solution, balancing security with speed, and making the right decisions to sell and scale your business.

Auth0 for Startups and Datadog for Startups provide you with the resources to implement and prioritize identity security and data security for your early-stage business.

During SFTechWeek 2024, we brought together a panel of experts to share their perspectives on AI tools:

- How does an engineering leader work with AI tools securely?

- How does a startup founder approach building an AI business?

- How does an investor evaluate up-and-coming AI startups?

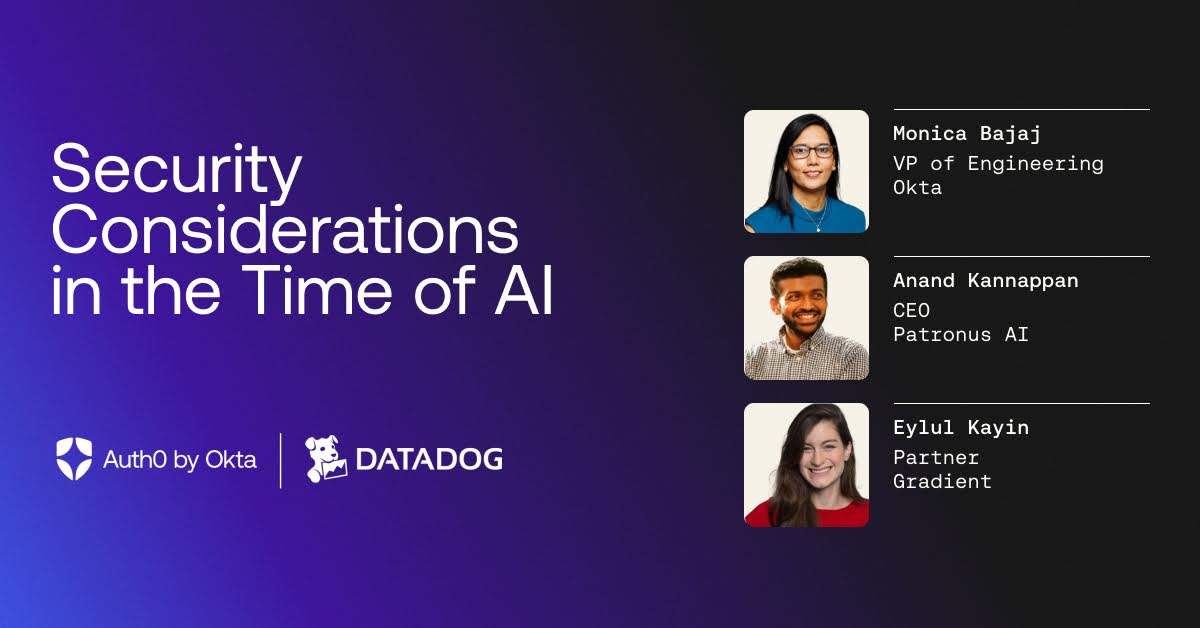

Meet the Panelists

Monica Bajaj is the VP of Engineering @ Okta and leads the developer experience for Customer Identity Cloud and the Fine Grained Authorization portfolio at Okta.

Anand Kannappan is the CEO @ Patronus AI, a Series A company that helps companies scalably and reliably catch failures in their AI products, such as hallucinations, unexpected behavior, and unsafe outputs. Patronus AI is also an Auth0 and Datadog customer.

Eylul Kayin is a Partner @ Gradient, an AI-focused fund that invests from pre-seed to Series A.

Here’s what we learned!

Please note that the following statements may be paraphrased and are not direct quotes.

Our Favorite Moments

- “We are solving so many problems in AI through AI, but how many companies are looking at it from an ethical perspective? This is really important. The ethical considerations while building your AI tool and deploying it in the companies using it are crucial because that's where the majority of disruption to humanity is going to come.” - Monica Bajaj

- “Even if you're moving at 200 miles an hour, just make sure you're driving with a seatbelt.” - Anand Kannappan

- “The goal is to create a full platform that can increasingly automate processes as the underlying infrastructure improves.” - Eylul Kayin

- “We're currently in that "New Hope" era for AI, where a lot of what we're doing today with AI is enabling us to rethink how we interact with autonomous systems, whether it's how we work together or how we coexist.” - Anand Kannappan

- “Going from zero to one should be a priority, but it’s crucial to ensure that customers are genuinely adopting the product and that there’s solid network engagement before scaling further. Honestly, going from zero to one is easier now than it was before, but the real challenge lies in going from one to five, one to ten, and beyond.” - Eylul Kayin

- “Before jumping to the conclusion that we can build everything in-house—something that engineers often think—I ask myself what will make my team more efficient. If it’s not part of our core business, do we really need to build it, or should we buy it?” - Monica Bajaj

Advancements in AI

What are you most excited about regarding the advancements of AI tools in the dev space?

Eylul:

The most widely adopted AI-powered developer tooling right now is Codegen, right? At Google, we're definitely seeing a lot of productivity lift that it's creating, shortening the development cycles. There's a lot of conversation around its high usage and the revenue that it's generating. There's definitely a lot of excitement in the VC ecosystem as well to continue funding Codegen competitors. There's almost $1-2 billion raised at an $8 billion post-money valuation.

A second thing I'm looking at is how we think about security if all this code is written by AI. Incident analysis is important, and there's a lot of interesting stuff happening in areas like SAST and SCA remediation. AIOps is a whole other thing! There's a lot of room to improve automation in config and log analysis, and there's a lot of excitement in that category as well.

Anand:

I've been super excited about the shift from thinking about language models in a research setting to applying them to real-world use cases. What excites me most is that we're entering an increasingly autonomous world. I think we've all enjoyed watching how fast the entire space and market have moved.

I like to compare this to the Internet era of, let's say, 20 years ago versus today. Matthew Prince, the co-founder and CEO of Cloudflare, once said that the early days of the Internet were like the Star Wars movie “A New Hope,” with a revolutionary force challenging traditional power structures. Today, the Internet is more like “The Empire Strikes Back,” because many established companies are very restrictive and controlling of the environment. The difference is that we're currently in that "New Hope" era for AI, where a lot of what we're doing today with AI is enabling us to rethink how we interact with autonomous systems, whether it's how we work together or how we coexist.

Monica:

The way I look at it is that technology has become very human-like. We are all talking, speaking, reading, and now developing in natural language. So that is the most important takeaway in terms of the development space. As you pointed out, code generation and code completion are definitely being widely used. Automated testing has always existed, but today, we have intelligent automation tools that can read your code and help generate tests.

Another is AI in DevOps, which is crucial, and then there’s content generation. As you build a product, the content must go hand in hand with development. Documentation needs to be integrated in such a way that users can self-serve effectively. Intelligent, code-based documentation that reads your code and generates snippets from a developer's perspective is very important.

Security Considerations

What security considerations are top of mind for you [in your role] when it comes to AI tools?

Monica:

The way I see it, when it comes to security concerns, I categorize them into three different buckets:

- Data security

- Model security

- Infrastructure security and Human-in-the-loop

The first is data security. This involves data governance—how you collect, store, use, and dispose of data. These four elements make up the data lifecycle. Data can often be manipulated in two main ways. One is from a security standpoint, where issues like prompt injection or data poisoning can disrupt the integrity of the data. Another way is through data augmentation, where you add synthetic data to help models improve and become more robust.

The second category is model security, which is crucial. When models are being built, it's important to consider gradients: if attackers can observe how the model's outputs change as its inputs or parameters change, they may be able to deduce how your model arrives at its results. Gradient masking is an important technique for obscuring that information so attackers can't easily work out how the model makes its predictions.

The third category is infrastructure security and human-in-the-loop. Infrastructure security focuses on how you deploy models and protect data in use, at rest, and in transit. It's essential to ensure that the data centers where your models are hosted are tamper-proof. I would also emphasize the importance of the human-in-the-loop concept, where humans and AI collaborate to enhance the explainability of AI, making the overall system more secure.

Anand:

If you're curious about this topic, you should look up OWASP (Open Web Application Security Project). They maintain a list of top vulnerabilities that previously focused on software vulnerabilities. In 2023, however, they released a new list that specifically addresses LLM (large language model) vulnerabilities. It includes some of the issues Monica mentioned, like prompt injection, along with overreliance on model output, insecure output handling, and other AI-native vulnerabilities, such as model theft.

At Patronus, we also hear developers discussing vulnerabilities within specific types of LLM systems, like retrieval systems, where they talk about preventing issues like context injections. What’s unique about our current environment is that security in this new era is no longer just about third-party actors and adversarial threats; it’s also about accuracy and reliability.

When we work with customers, we emphasize that both aspects need to be considered as much as possible. What’s also different about our approach at Patronus is that security isn’t just a defensive strategy; it’s an offensive move. The more you think about security from day one, the better positioned you'll be to make proactive decisions that put your AI product in a strong place for the long term.

Eylul:

One thing I’ll add is that a lot of the adoption of generative AI features in large enterprises and mid-sized markets has primarily focused on search functions or adding chatbots. We’re now at a point where we’re empowering these agents to perform various functions across the enterprise, integrating with all sorts of communication channels and systems.

I believe permissions become a very interesting consideration because, as the tasks of AI agents evolve over time and across contexts, you need to be very mindful and thoughtful about how to manage them. While this may not be a standalone company, it's an important feature to consider when thinking about enterprise pricing and bundling.
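To make the permissions point concrete, here is a minimal sketch, assuming a hypothetical setup in which each agent task is granted an explicit set of scopes and every tool call is checked against them, denying by default. The tool names, scope strings, `AgentContext`, and `authorize_tool_call` are illustrative inventions, not any product's API.

```python
from dataclasses import dataclass, field

# Hypothetical mapping of agent tools to the scopes they require (illustrative only).
TOOL_SCOPES = {
    "search_docs": {"docs:read"},
    "send_email": {"email:send"},
    "update_crm_record": {"crm:write"},
}


@dataclass
class AgentContext:
    """Permissions granted to one agent for one task (hypothetical model)."""
    task: str
    granted_scopes: set[str] = field(default_factory=set)


def authorize_tool_call(ctx: AgentContext, tool: str) -> bool:
    """Deny by default: unknown tools and missing scopes are rejected."""
    required = TOOL_SCOPES.get(tool)
    if required is None:
        return False
    # Allowed only if every required scope was granted for this task.
    return required <= ctx.granted_scopes


# A policy-question task only gets read access to documentation.
support_ctx = AgentContext(task="answer_policy_question", granted_scopes={"docs:read"})
print(authorize_tool_call(support_ctx, "search_docs"))  # True
print(authorize_tool_call(support_ctx, "send_email"))   # False
```

Because the grant is tied to a task rather than to the agent as a whole, widening what an agent may do becomes an explicit decision each time its role changes.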

If you're an early-stage founder and you’re aware of all these risks, how do you go about implementing security measures? What resources do you rely on daily to ensure that your product is secure?

Anand:

A major focus of our product is to help companies ensure that their AI products are secure. We recommend best practices in two main areas. First, it's important to establish guardrails and evaluators specific to your use case. Second, there are general safety and security considerations, such as content moderation.

For the first point, focusing on capabilities means creating custom evaluators that address potential failure modes. For example, if you’re a bank developing a chat assistant for finance, you want to ensure that the assistant doesn’t provide any financial or investment advice, as that could violate SEC regulations. You could create your own LLM judge or another intelligent mechanism to prevent this from happening or, at the very least, to detect when it does.

Regarding the second point, safety and security guardrails involve adhering to the right standards, like OWASP, among others. It’s crucial to implement appropriate validations in both online and offline settings to prevent failures from occurring.
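The custom-evaluator idea from the bank example can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical `judge` callable: in practice it might be an LLM prompted to classify responses, but here a crude keyword heuristic (`keyword_judge`) stands in so the example runs without any model. The patterns and names are assumptions, not Patronus's product or API.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalResult:
    passed: bool
    reason: str


# Crude stand-in for an LLM judge: flags phrasing that looks like a
# personalized investment recommendation. Patterns are illustrative only.
ADVICE_PATTERNS = [
    r"\byou should (buy|sell|invest in)\b",
    r"\bi recommend (buying|selling|investing)\b",
    r"\bguaranteed returns?\b",
]


def keyword_judge(response: str) -> bool:
    """Return True if the response looks like investment advice."""
    text = response.lower()
    return any(re.search(pattern, text) for pattern in ADVICE_PATTERNS)


def no_investment_advice(response: str,
                         judge: Callable[[str], bool] = keyword_judge) -> EvalResult:
    """Use-case-specific evaluator: fail any response the judge flags as advice."""
    if judge(response):
        return EvalResult(False, "response appears to give investment advice")
    return EvalResult(True, "no investment advice detected")


print(no_investment_advice("Your account balance is $1,200."))
print(no_investment_advice("You should buy more shares of ACME now."))
```

Because the judge is a parameter, the same evaluator could run offline over a test set and online against live responses, with a model-based judge swapped in once one is available.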

Common Mistakes in Building, Selling, or Fundraising

What are common or notable mistakes that AI companies make in the process of building, selling, or fundraising for their products?

Eylul:

One common mistake is building in an area that is very close to where the large foundational models are operating. Resources are often constrained from both a capital and talent perspective, making it difficult to keep up.

Another observation I’ve made, especially in the early-stage space, is that the period from ideation to some form of commercial deployment—especially on the app layer—is the shortest it has ever been. Many of my portfolio companies or others I encounter have what I refer to as an "experimental revenue run rate." There’s a lot of interest in trying out their products, but I encourage everyone to focus on achieving product-market fit.

Going from zero to one should be a priority, but it’s crucial to ensure that customers are genuinely adopting the product and that there’s solid network engagement before scaling further. Honestly, going from zero to one is easier now than it was before, but the real challenge lies in going from one to five, one to ten, and beyond.

In my discussions with Monica earlier, I mentioned that my team and I see about 10 to 20 companies a week, and you probably see around 100. We’re generally a high-volume group, and a significant portion of the companies selling generative AI-enabled products aren’t quite there yet. Particularly when selling to relatively unsophisticated users, a product that works 80% of the time often leads to customers being willing to pay 0% of the time.

One way to mitigate this is by offering a service product that is AI-enabled, though this can put the repeatability of the sales process at risk. For example, one of our portfolio companies decided to turn this challenge into an opportunity by providing an end-to-end service and monetizing as many steps as possible.

This company has a tool that allows employees to ask questions about policy documents. While the AI can’t answer questions 100% of the time, when it fails, the system upsells a ticketing service that elevates the question to a human. This way, they ensure that questions are answered as efficiently and automatically as possible.

If the tool stops working, they still deliver the service by having humans step in when necessary. The goal is to create a full platform that can increasingly automate processes as the underlying infrastructure improves.
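The escalate-to-a-human pattern described above can be sketched as a confidence-gated router. This is a hypothetical illustration, not the portfolio company's implementation: it assumes the AI system returns an answer along with a confidence score, and the 0.8 threshold, `open_ticket` hook, and `fake_model` stub are all made up for the example.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Answer:
    text: Optional[str]  # None when the AI system has no answer
    confidence: float    # assumed 0.0-1.0 score from the AI system


@dataclass
class Resolution:
    handled_by: str                 # "ai" or "human"
    text: Optional[str]
    ticket_id: Optional[int] = None


_ticket_ids = itertools.count(1001)  # stand-in for a real ticketing system


def open_ticket(question: str) -> int:
    """Hypothetical ticketing hook: queue the question for a human."""
    return next(_ticket_ids)


def resolve(question: str,
            answer_question: Callable[[str], Answer],
            threshold: float = 0.8) -> Resolution:
    """Answer automatically when confident enough; otherwise escalate."""
    answer = answer_question(question)
    if answer.text is not None and answer.confidence >= threshold:
        return Resolution("ai", answer.text)
    return Resolution("human", None, ticket_id=open_ticket(question))


def fake_model(question: str) -> Answer:
    """Toy stand-in for the AI system."""
    if "vacation" in question.lower():
        return Answer("Toy answer drawn from the policy document.", 0.93)
    return Answer(None, 0.2)


print(resolve("How many vacation days do I get?", fake_model).handled_by)   # ai
print(resolve("Can I expense a home office chair?", fake_model).handled_by)  # human
```

Raising or lowering `threshold` trades automation against accuracy. As the underlying models improve, more questions clear the bar and fewer reach the human queue, which is the gradual shift toward automation Eylul describes.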

Earlier, you mentioned some key factors, like product-market fit. These are relevant for any type of tool, right? Are you seeing any trends where AI startups tend to rush through the early stages of building their products, or do you think this applies to any type of product across various industries?

Eylul:

I think what we're experiencing now is very similar to the transition to cloud computing. The underlying framework for building software has changed: it's faster, easier, and cheaper to create new products. The key question becomes: where does the value ultimately accrue? A lot of it lies in the infrastructure, but the companies that succeed are those able to develop products that go the last mile, effectively serving very specific use cases that customers are reluctant to build in-house. Those use cases may not give the customer a competitive edge, but they address a sticky and important need.

In my observation, there aren’t many differences in how you build a successful business; it’s just becoming easier to create these products. As a result, the barrier to entry becomes a more significant concern for investors. The best way I’ve found to approach this is by identifying companies with strong go-to-market momentum—those that truly understand their customers and have been thoughtful about developing unique sales strategies.

AI Tools for Efficiency and Productivity

How do you decide which tools to use to increase efficiency and productivity?

Monica:

From a builder's perspective, I always start by considering what my core business is. Before jumping to the conclusion that we can build everything in-house—something that engineers often think—I ask myself what will make my team more efficient. If it’s not part of our core business, do we really need to build it, or should we buy it? That’s one key thought process.

Another important consideration is the specific problem I'm trying to solve, rather than just gathering a bunch of tools.

With AI tools entering the picture, it's crucial to focus on security and privacy since data is constantly moving. Compliance is equally important.

From a usability standpoint, I want to ensure that any new tool we build or buy is easy for users to onboard. It should be customizable because developers prefer pre-built packages that they can also extend to meet their needs.

Support and integration capabilities, along with well-structured and up-to-date documentation, are additional factors I consider when proposing or introducing any tool for the team.

Anand:

I would add that we focus on three main considerations when deciding whether to pay for a new tool, especially an AI tool.

- First, does it save us time?

- Second, does it save us money?

- And third, does it generate revenue for us or help us generate revenue?

Understanding which of these buckets a tool falls under will guide how much we should be willing to spend.

In the context of AI tools, it’s crucial to conduct thorough research on potential alternatives and what makes sense for you and your team. We're in a very noisy market where everyone is promoting their products, and things are evolving rapidly.

Have you had any experiences with newer AI tools where you decided to explore them, perhaps by building a proof of concept, and later realized it wasn’t worth the investment? Or do you have a thorough process in place to evaluate them beforehand?

Anand:

From our perspective, we’ve explored a few different tools. Many of these are usage-based, like OpenAI and other language models. In other cases, we've conducted more structured trials, particularly in the data annotation category. Here, we work with companies that contract annotators to help us develop new evaluation datasets or train models.

In these situations, we do some vetting to ensure that the individuals involved in the project are qualified, which helps us achieve high-quality results.

Monica:

We have done the same thing when we decided to implement code completion. Being a company focused on security, it gets a little harder for all the right reasons. So, we started with a proof of concept (POC) first, and then we monitored usage. If the uptake was significant, we expanded it further. The usage, engagement and the results were the three key areas we considered before rolling out a tool more widely.

Investing in AI Tools

How do you decide which AI tools to invest in?

Eylul:

Since our fund is AI-focused, we are ultimately interested in investing in businesses that innovate in this realm. However, we also invest in strong companies, which can sometimes come from passionate technical founders who may have a sales background or who are partnered with someone who can drive sales, particularly in the early stages, like the first $2 million of the company’s journey.

Personally, I'm less concerned about having a thematic approach that covers all segments. A multi-stage fund can afford to look at multiple companies in the space and then identify the best founder to back. We don't have that option, so we make quick decisions and invest in teams and their drive. I don't have a specific answer for the types of tools we focus on; we aim to avoid rigid categorizations as much as possible.

Challenges for Customers

In terms of preparing your products for customers who might be more wary of AI tools, how do you approach integrating AI into your offerings? What steps do you take to ensure a smooth transition?

Monica:

When preparing to integrate any tool, particularly an AI tool, the first and foremost consideration is security. It's crucial to evaluate how secure the tool is. Additionally, we need to ensure compliance with various global standards and regulations, such as GDPR and CCPA, especially given our global reach.

Another important factor is the tool's extensibility; it should be adaptable to our needs. From a security perspective, we also assess how tamper-proof the tool is and its overall success rate. Lastly, while revenue considerations are important, I focus primarily on whether the tool will make my teams more efficient and productive.

Eylul:

I realize I want to add something to my answer that I've been grappling with, and I’d be interested in your thoughts as well. Much of the current conversation around AI focuses on cost reduction, but whether the market is ready for outcome-based pricing is still uncertain. Personally, I lean toward finding AI tools that enhance uptime or generate revenue. I believe that focusing on price and demand elasticity often leads to a stronger business model compared to solely trying to reduce costs, which can result in a price spiral.

That said, I don't think we should ignore innovative ways to cut costs. However, I see that as a more challenging endeavor, especially in an increasingly competitive environment where it’s becoming easier to build new products.

Monica:

You raised a good point. What I wanted to emphasize is that when teams become productive, they gain the capacity to tackle other tasks. This highlights a common challenge: every company, and especially startups, often wrestles with the question of how to ship faster and release products to customers. This concern is always top of mind. If teams can achieve that, it ties directly back to revenue generation; selling faster means generating revenue more quickly. Therefore, if we use AI tools wisely and for the right reasons, there is significant potential for growth.

And do you feel similarly at Patronus with the size of the company that you have?

Anand:

Given that the market we're in is relatively young, there's definitely a lot of confusion among companies looking to invest in AI products regarding what they actually need. I recommend starting with a clear understanding of the success criteria for the product you're considering. Earlier, it was mentioned that if a product is only 80% effective, maybe you shouldn't pay for it at all. It's crucial to focus on the metrics that matter most to you and define them upfront. Otherwise, confusion can arise about what constitutes success, which can slow down decision-making for companies.

Risks

What risks do companies, products, and customers face?

Anand:

In terms of risks, I think they cover a lot of what we talked about earlier: capabilities for the specific use cases you're developing, along with more general concerns, especially around safety and security. What I always recommend is to look at the data. Especially if you're planning to invest in a security tool, particularly in the context of AI, it's crucial to do the right kind of benchmarking to understand how well it actually performs for your specific use case. Of course, you should do the same for any language model or language model system you're using internally.

For example, at Patronus we published a blog post a few weeks ago showing that Llama Guard was a very ineffective tool for content moderation in terms of toxicity, and we found similar results with Prompt Guard. We're seeing developers start to understand that they need to do this kind of analysis early, before they invest in something, especially if it's open source. Go ahead and address risks, but make sure you're doing it in the right way.
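Looking at the data before adopting a moderation or security tool can start as simply as running it over a labeled sample from your own use case and measuring precision and recall. A minimal sketch, assuming a hypothetical `classify` function that wraps whichever tool you are evaluating; the toy filter and four sample messages are invented for illustration and say nothing about how Llama Guard or Prompt Guard behave.

```python
from typing import Callable, Iterable, Tuple


def benchmark(classify: Callable[[str], bool],
              labeled: Iterable[Tuple[str, bool]]) -> dict:
    """Compare a classifier's 'toxic' flags against ground-truth labels."""
    tp = fp = fn = tn = 0
    for text, is_toxic in labeled:
        flagged = classify(text)
        if flagged and is_toxic:
            tp += 1   # correctly caught
        elif flagged and not is_toxic:
            fp += 1   # false alarm
        elif not flagged and is_toxic:
            fn += 1   # missed
        else:
            tn += 1   # correctly allowed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall}


# Toy stand-in for the tool under evaluation (hypothetical).
def naive_filter(text: str) -> bool:
    return "idiot" in text.lower()


sample = [
    ("You're an idiot.", True),
    ("Nobody wants you here, leave.", True),
    ("The idiot box is what my grandpa called the TV.", False),
    ("Thanks for the quick reply!", False),
]
print(benchmark(naive_filter, sample))
```

On this toy sample, the filter misses one of the two toxic messages and flags a harmless one, so precision and recall are both 0.5. That kind of gap only becomes visible once you measure against data that looks like your real traffic.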

Monica:

Piggybacking on that, since you mentioned toxicity, we also need to check models for bias and discrimination, which are very common and need to be addressed. The other part is ensuring that the model is not prone to adversarial attacks. Many of you may know about chaos testing; adversarial training works in a similar way, acting like chaos testing for models, because you want to ensure they are well-hardened and robust in terms of reliability and performance. So, that's going to be really important.

Since we're talking about open-source models, do you find that they are less reliable or require more testing than closed-source models?

Monica:

From an open-source perspective, there are so many models available these days, and I can't definitively say whether they are good or bad. People need to understand what each model does, test it out, and then make informed choices, especially given the vast array of options available right now. The market is quite chaotic, and for startups, as well as public companies, using open-source models makes a lot of sense. Why not take advantage of what’s out there?

Anand:

We actually published some research on this at the end of last year on arXiv called "SimpleSafetyTests," in which we tested a suite of open-source models, especially in the context of safety. At that point, we found that state-of-the-art open-source models had an average attack success rate of over 20%. That number has certainly decreased over time. I used to work at Meta, and I remember that the team focused on safety for language models was much larger than the team working on pre-training those models. That highlights how challenging a problem safety is and why it needs to be taken seriously. We're starting to see that safety gap close over time.

Guardrails and Safety Recommendations

What guardrails should be in place to protect user data? What safety recommendations (if any) do you have for your developers or customers when enabling them to use AI tools?

Monica:

Dos and don'ts have to be established. For example, it's crucial not to input your company data into these models, as you cannot know how that model will use or leverage that data elsewhere. That's number one.

The second important aspect is understanding where you can use the model and where you cannot use it in your application. This ties back to the privacy rules that your engineers must follow, which is very important.

Additionally, extensive testing is necessary before deploying the AI tool publicly, including a proof of concept where a group of people can assess and feel confident that it is ready to be rolled out. That's a key aspect.
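One lightweight way to back up the first rule, keeping company data out of third-party models, is to scrub obviously sensitive strings before any text leaves your environment. A minimal sketch, assuming regex-based redaction is acceptable for your data; the patterns (email addresses, AWS-style access key IDs, US Social Security numbers) are illustrative and far from exhaustive, so treat this as a complement to policy and review, not a replacement.

```python
import re

# Illustrative patterns only; real data needs broader, tested coverage.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("AWS_KEY_ID", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
]


def redact(text: str) -> str:
    """Replace every match of each rule with a placeholder such as [EMAIL]."""
    for label, pattern in REDACTION_RULES:
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Summarize the ticket from jane.doe@example.com using key AKIAABCDEFGHIJKLMNOP."
print(redact(prompt))
# → Summarize the ticket from [EMAIL] using key [AWS_KEY_ID].
```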

Anand:

From our perspective, I'd say it's very similar. We have internal processes that prioritize security and compliance. For example, we conduct frequent penetration tests to ensure that various parts of our product are not exposing risks to any of our customers.

A significant part of this is one of our product offerings: on-prem deployments. In recent months, we've noticed growing demand from companies worldwide for on-prem solutions, with some even moving back from the cloud to on-prem because of the additional risks associated with AI. So if you're going to use an AI vendor, it's crucial to make sure its product can be self-hosted in some way or run on your own premises. These are the kinds of measures we build on our team to support our customers.

Advice for Startups

What is your advice for AI startup founders looking to fundraise at this time?

Eylul:

This is a little bit of a hot take, but I think we're seeing that the idea of raising hundreds of millions of dollars upfront to buy a bunch of GPUs and run your own model has not turned out to be a phenomenal way of growing a venture-backed business. For some, it has worked, obviously, if you're among the top two or three.

I think back to two years ago when the hottest company to invest in within the agentic workflow space was Adept, which raised $400 million to run its own foundation model. Then, a few months later, a wave of competition emerged, utilizing smaller models in orchestration. Granted, many of these companies are still in the demo stage, and it’s hard to argue that they are operating at the level their marketing suggests.

Another interesting thing I've noticed, having wrapped up the most recent YC cohorts, is that so many companies are now offering their own kind of agentic workflows. One of the more sought-after ones even provided an IDE for people to build their own agents. This highlights the importance of being very thoughtful about how the underlying technology is evolving.

Competition is fascinating to consider when building. It's crucial to be mindful of how much you need to raise and to understand the implications for your runway. What counts as appropriate traction for pre-seed, seed, and Series A is constantly evolving and really depends on the sector. Keeping a good pulse on the ecosystem and having a mix of both angel and institutional investors on your cap table is a great way to keep learning about these dynamics.

Anand:

One thing I would add, especially if you're looking to fundraise in this market, is that it's really important to focus on the narrative and be able to tell a compelling story. Of course, that starts with the story you tell your team and yourself about where you are today and where you're going next. Given how fast this market is moving, you need to be crystal clear about what is going to stay stable. That's what you should bet on, because you're building a company for the long run, not something that changes every day in terms of your product experience or the kinds of customers you serve. Making sure you really understand and focus on the narrative is vital.

Especially in the current market, it's important to tell that story in the context of two key points: 1. It's a really large market, and 2. It's a very fast-growing market.

The last thing I want to mention is that a lot of questions come up about whether there are certain metrics you need to hit before you fundraise. I definitely agree that metrics are important, but market leadership is also crucial in an early-stage category. Being able to position yourself as a market leader matters, not just in fundraising but for your company in general.

Hot Takes

What are your hot takes on AI tools, companies, or the industry in general?

Monica:

I have two hot takes. The first is that companies, in general, are still trying to figure out how to make money from AI; many have yet to solve this. Large enterprises are pouring a lot of money into this business problem, and so much money is flowing from VCs to AI companies. It feels like any company that wants funding can just add ".ai" to its name and get money right now. The reality is that we are still trying to figure out how these companies will generate a return on that investment. That's definitely top of mind for me.

The second hot take I have is that we are solving so many problems in AI through AI, but how many companies are looking at it from an ethical perspective? This is really important. The ethical considerations while building your AI tool and deploying it in the companies using it are crucial because that's where the majority of disruption to humanity is going to come. While I'm in favor of AI big time, it has to be balanced with ethics in order to make it successful.

Anand:

I might add two hot takes as well. So, number one is, I would say that AI tools are just that—they're tools at the end of the day. We're pretty much in a world where a lot of developers around the world, at many companies, are learning about what machine learning is for the very first time. So, what I recommend is to not just think about the product experience and whether you're hitting good retention metrics, but also how to ensure you have an extremely good customer experience that is very high-touch. I think we've all seen the news about Accenture and how their services business has done extremely well over the past year. It's a different market compared to, let's say, traditional software markets for that exact reason. So, I'd say it's important to be obsessed not just with how successful your product is but actually with how successful your customers are with their AI products. That’s my first hot take.

And I'd say the second hot take, which was about companies, is that, especially if you are currently at an early-stage company or hoping to join one, there might even be a positive correlation between how successful a company is and how chaotic it feels internally. I'd say that’s the case for pretty much any size of company, but especially for startups. So, definitely make sure you embrace the chaos. Even if you're moving at 200 miles an hour, just make sure you're driving with a seatbelt.

Eylul:

As I spend so much of my time thinking through these workflow automations, it makes me consider what's going to happen to SaaS. So much of what we consider traditional SaaS is ultimately a human-machine interface for business personas to interact with a database, right? Say I'm sitting in marketing and can query things on my own, and, fast forward a bit, I can replace all the CRM functionality with a number of the email agents we're looking at right now. Why would an enterprise still need to pay for that middle layer of the CRM? My mind goes to Klarna, which recently moved away from Salesforce, and to Workday as well. What's going to happen to that middle layer? What's the role of everyone across that stack, from the business persona to the engineer to IT ops? How are those roles evolving over time? I think it's really interesting, and we'll be watching closely.

For Startups

That’s all we have for now! If you’d like to continue learning about this topic, check out Security Considerations in the Time of AI Engineering, in which we explore potential risks of using AI tools in the development process, plus questions you could ask yourself to help make more informed decisions – for you, your product, and your customers.

This event was organized by Auth0 for Startups and Datadog for Startups. As a reminder, Auth0 for Startups offers eligible startups one year free of the Auth0 B2B Professional plan with support for 100k monthly active users. Datadog for Startups offers AI startups under two years old who attended the event one year of Datadog Pro for free - regardless of funding. 🎉

We want to give a huge thank you to our panel. See you at the next event!