As companies race to enhance their digital offerings with AI agents, they're also facing a fundamental question of consumer trust: How can we tell customers they can trust our AI agents with their most sensitive data—and mean it?

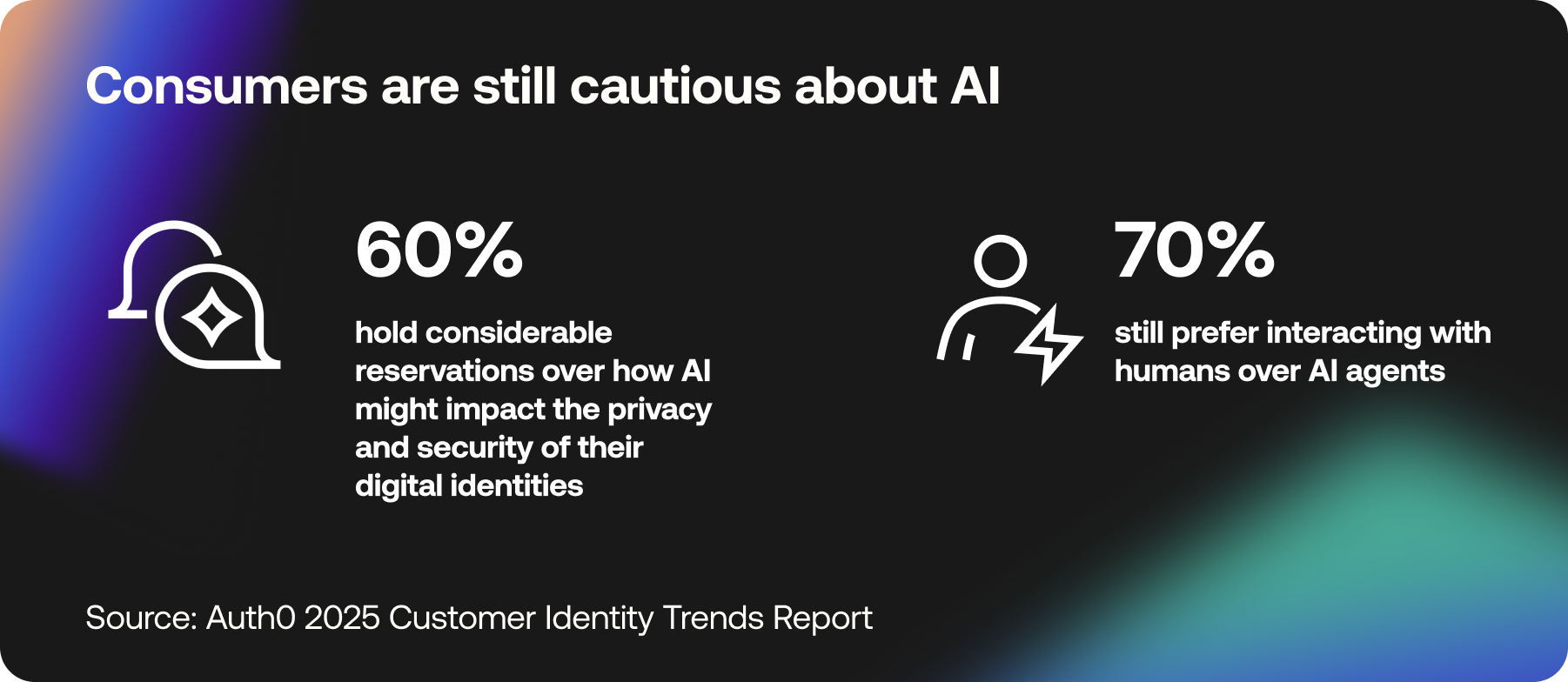

The Auth0 Customer Identity Trends Report 2025 found that although 37% of consumers already use GenAI tools, considerable trust issues remain. Seventy percent of people still prefer interacting with humans over AI agents, and 60% have serious reservations about how AI might affect the privacy and security of their digital identities.

As AI agents become more autonomous and take on a wider range of tasks, one thing is clear: customers will only trust them (and the companies deploying them) if security is handled correctly from the start. And as AI-powered threats grow in both frequency and potency, earning that trust will only become more important.

Customer identity and access management (CIAM) can offer unique value when it comes to managing these threats, maintaining consumer trust, and securely deploying AI agents.

Here are four foundational identity practices for building safe, scalable, and trustworthy AI agent experiences for your users.

1. Get authenticated before getting to work

To act autonomously and securely, AI agents must be firmly tied to a verified user identity that has undergone sufficient authentication before acting on behalf of customers. Without secure up-front authentication, AI agents risk gaining inappropriate or unnecessary access to sensitive data, making unwanted decisions, or triggering processes without explicit user consent.

The stakes are high: 44% of users who don't trust AI agents cite concerns about personal data security. If users feel like they're handing over access without verification, they'll walk away.

How modern CIAM can build trust:

Implement seamless but strong authentication before the AI agent takes action. Passkeys, biometrics, and adaptive multi-factor authentication (MFA) are the kinds of modern methods that build user confidence without adding much friction. They also strengthen your overall security posture by reducing reliance on common weak points such as passwords and other static credentials. With AI agents operating at scale, removing these weak points is critical.

Customers want security that is easy to walk through but tough to break through, and authentication is where that all begins.
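To make "authenticate before acting" concrete, here is a minimal Python sketch of a gate an agent could run before every action. It assumes the user's OpenID Connect ID token has already been signature-verified upstream, and it reads two standard claims: `auth_time` (when the user last authenticated) and `amr` (authentication methods used, with values defined in RFC 8176). Which `amr` values count as "strong" and the 15-minute freshness window are illustrative assumptions, as are the names `assert_user_authenticated`, `run_agent_action`, and `AuthenticationRequired`.

```python
import time
from typing import Optional

# RFC 8176 "amr" values this sketch treats as strong (an assumption; tune per policy):
# mfa = multiple factors, hwk = proof-of-possession of a hardware-secured key,
# face / fpt = facial or fingerprint biometrics.
STRONG_AMR = {"mfa", "hwk", "face", "fpt"}


class AuthenticationRequired(Exception):
    """Raised when the agent must send the user back through login or step-up."""


def assert_user_authenticated(claims: dict, max_age_s: int = 900,
                              now: Optional[float] = None) -> str:
    """Return the user id ("sub") if the already-verified ID token claims show a
    recent, strong authentication; otherwise raise AuthenticationRequired."""
    now = time.time() if now is None else now
    user_id = claims.get("sub")
    if not user_id:
        raise AuthenticationRequired("no verified user identity")
    if not STRONG_AMR & set(claims.get("amr", [])):
        raise AuthenticationRequired("step-up to passkey, biometric, or MFA required")
    auth_time = claims.get("auth_time")
    if auth_time is None or now - auth_time > max_age_s:
        raise AuthenticationRequired("authentication is too old; re-authenticate")
    return user_id


def run_agent_action(claims: dict, action: str, now: Optional[float] = None) -> str:
    # The agent never acts until the gate above has passed.
    user_id = assert_user_authenticated(claims, now=now)
    return f"agent performed {action!r} for {user_id}"
```

For instance, `run_agent_action({"sub": "user-123", "amr": ["hwk"], "auth_time": 1000}, "check order status", now=1300)` returns `"agent performed 'check order status' for user-123"`, while the same call with `"amr": ["pwd"]` raises `AuthenticationRequired`, which would be the cue to route the user through a passkey or MFA step-up.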

2. Know the keys on your AI agents' keychain

AI agents are powerful because they are connected to stores of information. They can check inventory, send messages, look up user data, initiate workflows, and more—all by tapping into your APIs and backend systems. But that same connectivity creates security risks if not appropriately managed.

Without proper controls, agents can overstep. They may pull the wrong data, call the wrong function, or hold on to outdated permissions. If tokens are hardcoded or mismanaged, attackers can exploit them to impersonate an agent or move laterally through your environment.

How modern CIAM can build trust:

Adhere strictly to the principle of least privilege and give your AI agents only the permissions they need. Vault and rotate their access tokens just as you would for privileged users. Define context-specific rules for what they can access, how often they can access it, and under what conditions. Every API call should be monitored, rate-limited, and traced back to an authenticated agent identity.
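These controls can be sketched in a few dozen lines of Python. Everything here is an illustrative assumption rather than an Auth0 API: `AgentToken` stands in for a short-lived, scoped credential the agent fetches from a vault at runtime, `SlidingWindowLimiter` enforces a per-agent call budget, and `call_api` checks expiry, scope, and rate limit before recording every attempt, allowed or denied, against the agent's identity in an in-memory `audit_log`.

```python
import time
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class AgentToken:
    """Hypothetical short-lived credential fetched from a vault at runtime (never hardcoded)."""
    agent_id: str
    scopes: FrozenSet[str]
    expires_at: float  # epoch seconds; rotation means issuing a new token before this


class AccessDenied(Exception):
    pass


class SlidingWindowLimiter:
    """Allow at most `limit` calls per agent in any `window_s`-second window."""

    def __init__(self, limit: int, window_s: float) -> None:
        self.limit = limit
        self.window_s = window_s
        self._calls: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, agent_id: str, now: float) -> bool:
        calls = self._calls[agent_id]
        while calls and now - calls[0] >= self.window_s:
            calls.popleft()  # drop calls that fell out of the window
        if len(calls) >= self.limit:
            return False
        calls.append(now)
        return True


# (timestamp, agent_id, scope, outcome) for every attempted call.
audit_log: List[Tuple[float, str, str, str]] = []


def call_api(token: AgentToken, required_scope: str, limiter: SlidingWindowLimiter,
             now: Optional[float] = None) -> str:
    now = time.time() if now is None else now
    reason = None
    if now >= token.expires_at:
        reason = "token expired"
    elif required_scope not in token.scopes:
        reason = "missing scope"  # least privilege: no implicit access
    elif not limiter.allow(token.agent_id, now):
        reason = "rate limited"
    # Record allowed and denied attempts against the agent's identity.
    audit_log.append((now, token.agent_id, required_scope, reason or "allowed"))
    if reason:
        raise AccessDenied(f"{token.agent_id}: {reason}")
    return "ok"
```

With `SlidingWindowLimiter(limit=2, window_s=60)`, an inventory agent holding only `inventory:read` can make two reads a minute; a third read inside that minute, any attempt to use `orders:refund`, or any call after `expires_at` raises `AccessDenied` and leaves a denial entry in the log. A real deployment would persist the audit trail and enforce limits at a gateway rather than in process.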

3. Running on trust? Make sure it hasn't expired

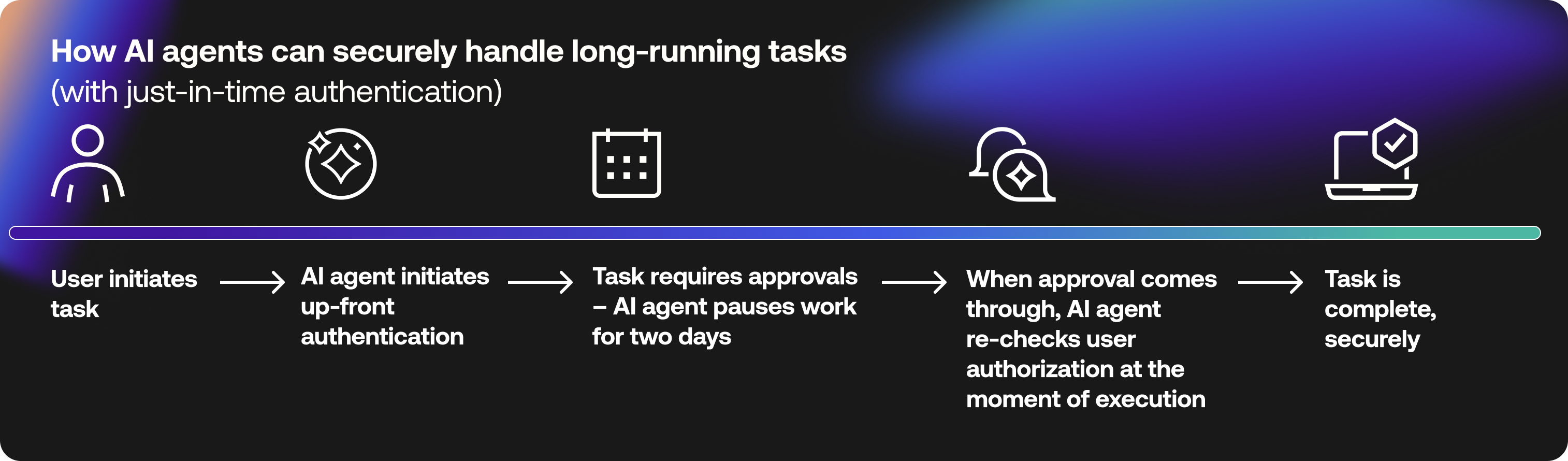

AI agents don't always finish tasks right away. They may wait for data, hold for approvals, or perform actions hours (or even days) after the user first initiates an interaction. This creates a serious security challenge: how do you know the request is still valid when the agent finally executes it?

Many current security models aren't built for how AI agents work. Traditional session-based authentication either expires mid-task, leaving agents locked out, or is kept alive so long that agents still hold open access long after the user has moved on. That open-ended access is low-hanging fruit for bad actors looking to exploit vulnerabilities.

How modern CIAM can build trust:

Leverage just-in-time authentication to re-validate agents handling delayed or asynchronous workflows. This ensures that even if a task was triggered hours, days, weeks, or even months ago, the agent re-checks user authorization at the moment of execution. It also minimizes open-ended risk exposure by closing access when it's no longer needed.

For example, imagine an AI agent receives a customer's order for an out-of-stock item. When the item is restocked two weeks later, the agent asks the user to re-authenticate and confirm that the request is still valid before fulfilling the order.
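Here is a minimal Python sketch of just-in-time re-validation for this back-order scenario. The names `PendingTask`, `active_grants`, and `execute_when_ready` are hypothetical, and `confirm_with_user` is a plain callback standing in for whatever out-of-band prompt you use (a push notification, or a standard flow such as OpenID Connect Client-Initiated Backchannel Authentication). The idea it illustrates: authorization is re-checked when the task runs, stale requests require fresh user confirmation, and the grant is closed once it has been used.

```python
from dataclasses import dataclass
from typing import Callable, Set, Tuple


@dataclass
class PendingTask:
    task_id: str
    user_id: str
    action: str        # e.g. "purchase:sku-42"
    created_at: float  # epoch seconds when the user made the request


# Hypothetical store of (user_id, action) grants the user still allows.
# Revoking consent elsewhere removes the entry.
active_grants: Set[Tuple[str, str]] = set()


def execute_when_ready(task: PendingTask, now: float, max_grant_age_s: float,
                       confirm_with_user: Callable[[PendingTask], bool]) -> str:
    grant = (task.user_id, task.action)
    # 1. Re-check authorization at execution time, not at request time.
    if grant not in active_grants:
        return "cancelled: grant revoked"
    # 2. A stale request needs the user to re-authenticate and confirm.
    if now - task.created_at > max_grant_age_s and not confirm_with_user(task):
        active_grants.discard(grant)
        return "cancelled: user declined"
    # 3. Use the grant once, then close it so no open-ended access remains.
    active_grants.discard(grant)
    return f"executed {task.action} for {task.user_id}"
```

With a one-day `max_grant_age_s`, a two-week-old order triggers `confirm_with_user`; if the user approves, the order executes and the grant is removed, so replaying the same task afterward returns `"cancelled: grant revoked"`.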

4. Keep AI agents in their lane

AI agents can do a lot, but that doesn't mean they should. One agent may be built to retrieve order history while another handles refund approvals, but if they all share the same broad permissions, you've created a system where a low-risk agent is empowered to make high-impact decisions it has no business making.

Without firm guardrails in place, AI agents are prone to "privilege creep", gradually gaining access to systems and actions far beyond their original purpose. The results can be disastrous, leading to unapproved actions that severely undermine customer trust.

How modern CIAM can build trust:

Apply least-privilege principles to every AI agent. Start by defining roles that align with each agent's function. Then use fine-grained authorization to adjust permissions dynamically based on the task, user behavior, and risk. Regularly audit and adjust agent roles to reflect changes in the business or in each agent's scope. Agents are increasingly autonomous, but they still need supervision.
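As a rough illustration of role-based defaults combined with per-request, fine-grained checks, the Python sketch below reuses the order-history and refund agents described earlier in this section. The role names, permission strings, risk score, refund limit, and risk threshold are all hypothetical; in practice these rules would usually live in a dedicated authorization service or policy engine rather than in application code.

```python
from dataclasses import dataclass
from typing import Dict, Set

# Hypothetical roles aligned with each agent's function.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "order_history_agent": {"orders:read"},
    "refund_agent": {"orders:read", "refunds:create"},
}

# Permissions this sketch treats as high-impact (an assumption).
HIGH_IMPACT: Set[str] = {"refunds:create"}


@dataclass
class RequestContext:
    risk_score: float    # 0.0 (low) to 1.0 (high), from a hypothetical risk engine
    amount: float = 0.0  # refund amount, if any


def is_allowed(role: str, permission: str, ctx: RequestContext,
               refund_limit: float = 100.0, max_risk: float = 0.7) -> bool:
    # Coarse check: the agent's role must grant the permission at all.
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        return False
    # Fine-grained checks, evaluated per request from the current context.
    if permission in HIGH_IMPACT and ctx.risk_score >= max_risk:
        return False
    if permission == "refunds:create" and ctx.amount > refund_limit:
        return False
    return True
```

Under these rules, `order_history_agent` can never create refunds, and even `refund_agent` is denied when the request's risk score reaches 0.7 or the amount exceeds 100. Because the role grant is only the first check, tightening an agent's scope after an audit means editing one entry in `ROLE_PERMISSIONS`.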

Trust shouldn't be bolted on

We're firmly in the AI era now, and in this era, trust must be built in, not added on later. That means treating AI security as an identity challenge from the word "go". Users want to know: Who's acting on my behalf? What are they doing? And how do I stay in control?

By focusing on authentication, access control, long-running task management, and fine-grained authorization, organizations can introduce AI agents that are both useful and secure. With the right identity security measures in place, companies can turn their AI agents into another opportunity to build and maintain trust.

Discover how Auth0 can help secure your AI agents. Visit auth0.com/ai.

About the author

Michelle Agroskin

Staff Product Marketing Manager, Auth0

Michelle Agroskin is a Staff Product Marketing Manager for Okta. She leads the Customer Identity AI, Authorization, and Consumer portfolios, driving go-to-market strategy, messaging, launches, and strategic growth initiatives.

She lives in NYC and in her free time, loves to travel the world and try new restaurants!