Developers building with AI have probably had this thought when a new standard like Anthropic's Model Context Protocol (MCP) comes along: "This looks interesting, but why can't I just use an API like I always have?"

It's a fair question, and the logic holds up on the surface. Why not just give a large language model (LLM) an OpenAPI spec and let it figure out how to make the right requests?

The problem is that this approach runs into some major real-world roadblocks. An interface built for a human developer to write code against fundamentally differs from a protocol an AI agent needs to reason with.

Here’s the bottom line: a traditional API is a set of instructions for you, a developer. MCP is a language for your AI agent. Let's break down why that distinction is so crucial for building applications that actually work.

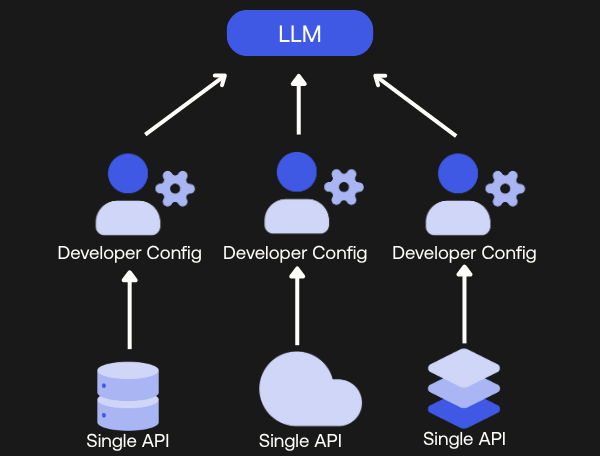

Where Traditional APIs Fall Short for Agents

Using a standard API to give an LLM a new capability can feel like a square peg in a round hole. Here are some of the real-world friction points you'll run into.

1. The "Paradox of Choice" Kills Performance: You'd think giving your agent more tools would make it smarter. It doesn't. It just makes it more confused. An enterprise API can easily have 75-100 endpoints, and LLMs are surprisingly bad at choosing from a long list of options. Faced with too many choices, they slow down, struggle to differentiate between similar tools, and often pick the wrong one or fail to act at all. You're then forced to manually curate a tiny list of tools, which defeats the purpose of having a rich API in the first place.

2. The Manual Translation Burden: Your API docs are written for a human developer who can infer context and Google things. An AI agent can't do that. So, you become a full-time translator, writing a wrapper for every tool and meticulously describing its purpose, parameters, and output. This isn't just tedious; it's a brittle system that breaks every time the underlying API changes.

3. Your Agent is Flying Blind: Your agent can't ask your API, "What's new?" or "What can you do for me?" It only knows about the tools you've hard-coded for it. This prevents your agent from adapting or taking advantage of new capabilities, limiting its potential autonomy and usefulness.
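To make the translation burden concrete, here is a minimal sketch of the kind of hand-written wrapper described in the second point. The tool name `update_user_address`, the endpoint path, and the schema are all hypothetical and not taken from any real API. The function only builds a request description rather than sending anything over the network.

```python
import json

# Hypothetical tool description, written by hand for the LLM.
# Every name, parameter, and description must be kept in sync
# with the underlying API manually.
UPDATE_USER_ADDRESS_TOOL = {
    "name": "update_user_address",
    "description": "Update the mailing address for an existing user.",
    "parameters": {
        "type": "object",
        "properties": {
            "user_id": {"type": "string", "description": "ID of the user to update."},
            "address": {"type": "string", "description": "New mailing address."},
        },
        "required": ["user_id", "address"],
    },
}


def build_update_user_address_request(arguments: dict) -> dict:
    """Translate the model's tool arguments into an HTTP request description."""
    required = UPDATE_USER_ADDRESS_TOOL["parameters"]["required"]
    missing = [name for name in required if name not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {', '.join(missing)}")
    return {
        "method": "PATCH",
        # Hypothetical path; if the real API renames it, this wrapper breaks.
        "path": f"/users/{arguments['user_id']}/address",
        "body": json.dumps({"address": arguments["address"]}),
    }


request = build_update_user_address_request(
    {"user_id": "u_123", "address": "1 Main St"}
)
print(request["method"], request["path"])
```

Multiply this by every endpoint you want the agent to reach, and the maintenance cost becomes clear: the description, the schema, and the path each drift independently whenever the API changes.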

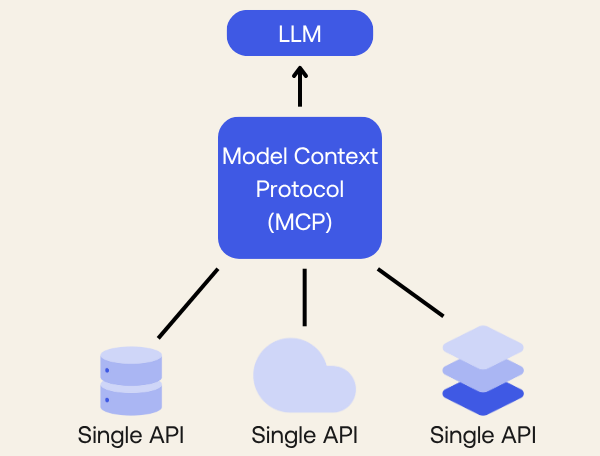

How MCP Solves These Problems

MCP isn't just a different API; it's a different approach designed specifically for how an agent "thinks."

1. It Abstracts Complexity for Better Reasoning: This is where MCP really shines. Instead of overwhelming the LLM with 100 low-level tools, you give it a handful of powerful, high-level capabilities. For instance, you wouldn't give it separate tools for createUser, updateUserAddress, and resetUserPassword. Instead, you'd offer one simple capability: manageUserProfile. Your agent invokes that one capability, and the MCP server handles the underlying logic. This simplifies the agent's job and lets it focus on the goal, not the implementation details.

A good example is an AI-native workflow that wouldn't make sense in a standard API. LLMs often struggle to write complex SQL correctly. Instead of a single run_sql tool, an MCP server could offer a multi-step database_migration capability that first stages the SQL on a test branch, asks the LLM to verify it, and only then commits it with a final command. This guides the agent to success by meeting it at its level of reasoning.

2. It Enables Dynamic Discovery: An agent can connect to an MCP server at runtime and ask, "What can you do?" It can learn about the capabilities available to it on the fly. This makes your whole system more adaptable, allowing you to add new tools without having to redeploy your agent's code.

3. It Standardizes Communication: To borrow the "USB-C for AI" analogy, MCP creates a universal protocol for agent-to-tool communication. This reduces the need for custom wrappers for every service, leading to a more robust and scalable ecosystem.
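The first two ideas, one high-level capability in place of many low-level tools and discovery at runtime, can be sketched in a few lines of plain Python. This is a toy illustration, not the MCP SDK or its wire protocol: `ToyServer`, `list_tools`, and `call_tool` are hypothetical names that only loosely mirror the protocol's list-and-call pattern, and the user store is an in-memory dictionary. The capability is named `manage_user_profile` to follow Python naming conventions.

```python
from dataclasses import dataclass, field
from typing import Callable

# In-memory stand-in for the system behind the low-level API.
USERS: dict[str, dict] = {}


def create_user(user_id: str, email: str) -> dict:
    USERS[user_id] = {"email": email, "address": None}
    return USERS[user_id]


def update_user_address(user_id: str, address: str) -> dict:
    USERS[user_id]["address"] = address
    return USERS[user_id]


def manage_user_profile(action: str, user_id: str, **fields) -> dict:
    """One high-level capability that routes to the low-level operations."""
    handlers: dict[str, Callable[..., dict]] = {
        "create": create_user,
        "update_address": update_user_address,
    }
    if action not in handlers:
        raise ValueError(f"unsupported action: {action}")
    return handlers[action](user_id, **fields)


@dataclass
class ToyServer:
    """Toy stand-in for a tool server; not the MCP SDK or wire protocol."""

    tools: dict[str, tuple[str, Callable[..., dict]]] = field(default_factory=dict)

    def register(self, name: str, description: str, handler: Callable[..., dict]) -> None:
        self.tools[name] = (description, handler)

    def list_tools(self) -> list[dict]:
        # Discovery: the agent asks "What can you do?" at runtime.
        return [{"name": n, "description": d} for n, (d, _) in self.tools.items()]

    def call_tool(self, name: str, arguments: dict) -> dict:
        _, handler = self.tools[name]
        return handler(**arguments)


server = ToyServer()
server.register(
    "manage_user_profile",
    "Create a user or update fields on an existing profile.",
    manage_user_profile,
)

available = server.list_tools()
server.call_tool(
    "manage_user_profile",
    {"action": "create", "user_id": "u_1", "email": "a@example.com"},
)
result = server.call_tool(
    "manage_user_profile",
    {"action": "update_address", "user_id": "u_1", "address": "1 Main St"},
)
print(available, result)
```

The agent sees one tool with a clear description, and registering another capability on the server changes what `list_tools` returns without touching the agent's code.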

The Security Question: Who is This Agent, Anyway?

This new architecture brings new security questions to the forefront. When an agent can discover and use tools on its own, you need to be able to answer:

How do we ensure the agent is truly authorized to act for a specific user?

How do we enforce granular permissions so an agent can only use the tools it's been allowed to, for the user it's serving?

These aren't just theoretical questions; they represent the next real-world challenge for AI security. To solve them, we need to think about how to apply familiar identity concepts in this new agentic context. This means using established patterns like OAuth 2.0 for token delegation to give an agent specific, limited permissions and ensuring a user's identity is securely bound to the entire lifecycle of a conversation.
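As a rough sketch of what granular permissions could look like at the tool boundary, the check below gates each tool call on two things: the token's subject must match the user the conversation is bound to, and the token must carry the scope the tool requires. The scope names and the `REQUIRED_SCOPES` mapping are hypothetical, and the sketch assumes the access token has already been validated (signature, expiry, audience) by a proper OAuth library before these claims are read.

```python
from dataclasses import dataclass

# Hypothetical mapping from tool name to the scope it requires.
REQUIRED_SCOPES = {
    "manage_user_profile": "profile:write",
    "database_migration": "db:migrate",
}


@dataclass(frozen=True)
class TokenClaims:
    """Claims from an access token that is assumed to be already validated."""

    sub: str    # the user the agent is acting for
    scope: str  # space-delimited scopes, as in OAuth 2.0


def authorize_tool_call(claims: TokenClaims, tool_name: str, conversation_user: str) -> bool:
    """Allow a call only for the bound user and only with the required scope."""
    required = REQUIRED_SCOPES.get(tool_name)
    if required is None:
        return False  # unknown tools are denied by default
    if claims.sub != conversation_user:
        return False  # token was not issued for this conversation's user
    return required in claims.scope.split()


claims = TokenClaims(sub="user-1", scope="profile:write profile:read")
print(authorize_tool_call(claims, "manage_user_profile", "user-1"))
print(authorize_tool_call(claims, "database_migration", "user-1"))
```

Denying unknown tools by default matters here because discovery means the set of tools can grow without the agent's code changing.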

To learn more about the core principles of securing AI applications, explore the Auth0 for AI Agents documentation.

For a practical, hands-on example of implementing these security patterns, read our guide on how to secure and deploy a remote MCP Server with Auth0 and Cloudflare, which walks you through the entire process of building a secure bridge between an AI application and an external API.


MCP Is the Right Interface for Your AI Agent

Ultimately, MCP is not a replacement for your API, but a necessary abstraction layer designed for a new consumer: the AI agent. Building an effective server requires more than just a one-click conversion of an OpenAPI spec. The emerging best practice is a hybrid approach: start with your existing spec, but aggressively curate the toolset to avoid overwhelming the LLM. Abstract your low-level endpoints into high-level, task-oriented capabilities, and commit to continuous evaluation to ensure your agent performs reliably. This thoughtful design is the key to unlocking the true potential of autonomous AI applications.
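The curation step can start very simply. The sketch below assumes an OpenAPI document already parsed into a dictionary and keeps only the operations whose `operationId` appears on a hand-picked allowlist. The sample spec and the allowlist are made up for illustration, and real curation would also involve rewriting descriptions and merging related operations into higher-level capabilities.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "options", "head", "trace"}


def curate_operations(spec: dict, allowlist: set[str]) -> list[dict]:
    """Return only the allowlisted operations from a parsed OpenAPI document."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            if operation.get("operationId") in allowlist:
                tools.append(
                    {
                        "name": operation["operationId"],
                        "method": method.upper(),
                        "path": path,
                        "description": operation.get("summary", ""),
                    }
                )
    return tools


# Made-up spec fragment for illustration.
sample_spec = {
    "paths": {
        "/users": {
            "post": {"operationId": "createUser", "summary": "Create a user."},
            "get": {"operationId": "listUsers", "summary": "List users."},
        },
        "/users/{id}/password": {
            "post": {"operationId": "resetUserPassword", "summary": "Reset a password."},
        },
    }
}

curated = curate_operations(sample_spec, {"createUser", "resetUserPassword"})
print([tool["name"] for tool in curated])
```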

About the author

Will Johnson

Developer Advocate

Will Johnson is a Developer Advocate at Auth0. He loves teaching topics in a digestible way to empower more developers. He writes blog posts, records screencasts, and writes eBooks to help developers. He's also an instructor on egghead.io.
