OpenClaw (formerly Moltbot, formerly ClawdBot) has amassed over 150,000 GitHub stars. The premise is a personal AI assistant with access to your full digital life: Signal, WhatsApp, iMessage, email, browser, everything. People bought dedicated Mac Minis just to run it.

The takeaway is clear: the most capable agent reaches across your entire technology stack, pulls data from any system, takes action in any application, and executes without waiting for human approval. That's also exactly what attackers want.

The features that make AI agents useful are the same features that make them dangerous. This is not a bug to patch; it's a missing foundation.

Agents Excel by Breaking Down Silos

Modern workplaces are dense networks of employees who rely on software and services. Enterprises deploy over 100 apps on average, and many organizations complement these SaaS applications with a suite of internal applications and APIs.

In our work with enterprise customers, we frequently hear of organizations managing thousands of internal APIs and applications across the enterprise IT sprawl. Each app is a silo with its own interface, data model, and learning curve.

For instance, in their day-to-day work, an account manager may review or update the CRM, make changes to a project tracker, combine data from three spreadsheets, cross-reference calendars, and draft follow-ups. Every task means switching context. That's friction.

Agents change this. They can jump between Salesforce, Zendesk, Gmail, and Google Calendar, updating the CRM, drafting the follow-up, and scheduling the next meeting in a single workflow. The boundaries that slow humans down are invisible to software.

This is why agents are valuable. And exactly why they're dangerous.

When your sales data lives in Salesforce and payroll lives in Workday, a compromised sales credential can't touch payroll. The service boundary is the protection. An agent with access to both removes the boundary.

Agents Can Be Riskier Than Employees

Agents inherit the vulnerabilities of both humans and software.

From humans, agents inherit broad discretionary access. They make judgment calls, operate across systems, and take actions based on context.

From software, agents inherit deterministic behavior. Find a working exploit, and it works every time. Unlike phishing a human (probabilistic, labor-intensive), attacking an agent is automatable and scalable.

Traditional software is exploitable but has narrow permissions and is siloed. Humans have broad access, but compromising one takes significant effort from the attacker. Agents combine broad access with reliable exploitation.

Agents turn a collection of app permissions into a single workflow permission: a composite permission surface where individually authorized steps become dangerous when chained. Give an agent employee-equivalent access, and you get employee-equivalent blast radius, with software-like repeatability.

Prompt Injection Is Remote Code Execution

From October 2025 through January 2026, researchers observed over 91,000 attack sessions targeting AI infrastructure. Systematic reconnaissance of LLM endpoints. Active attacks in production.

The EchoLeak vulnerability in Microsoft 365 Copilot showed zero-click exfiltration.

- An attacker sends an email with hidden instructions.

- Copilot ingests the malicious prompt.

- It extracts data from OneDrive and SharePoint.

- It exfiltrates via trusted Microsoft domains.

No clicks required. CVSS score 9.3. Microsoft patched it in June 2025 before mass exploitation, but the vulnerability class remains open across the industry.

Viewed through an authorization lens, EchoLeak looks like this: the agent had permission to access OneDrive, permission to access SharePoint, and permission to send to Microsoft domains. Every individual action was authorized. The combination was catastrophic.
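To make the gap concrete, here is a minimal sketch in Python of the difference between checking each action in isolation and checking it against what has already happened in the workflow. The action names, the `WorkflowState` type, and the rule itself are illustrative assumptions, not a description of how Copilot or any product evaluates requests.

```python
from dataclasses import dataclass, field

# Hypothetical static allow list, mirroring the permissions described above.
STATIC_PERMISSIONS = {
    "read_email",
    "read_onedrive",
    "read_sharepoint",
    "send_to_microsoft_domain",
}
SENSITIVE_READS = {"read_onedrive", "read_sharepoint"}
OUTBOUND_ACTIONS = {"send_to_microsoft_domain"}


def per_action_check(action: str) -> bool:
    """Static check: is this single action on the allow list?"""
    return action in STATIC_PERMISSIONS


@dataclass
class WorkflowState:
    """Tracks what has happened so far in one agent workflow."""
    untrusted_input_seen: bool = False
    sensitive_data_read: bool = False
    history: list = field(default_factory=list)


def workflow_check(state: WorkflowState, action: str, input_trusted: bool = True) -> bool:
    """Workflow-level check (assumed rule): deny outbound sends once untrusted
    input and a sensitive read have both occurred in the same workflow."""
    if not per_action_check(action):
        return False
    if not input_trusted:
        state.untrusted_input_seen = True
    if (action in OUTBOUND_ACTIONS
            and state.untrusted_input_seen
            and state.sensitive_data_read):
        return False
    if action in SENSITIVE_READS:
        state.sensitive_data_read = True
    state.history.append(action)
    return True


# The four EchoLeak steps; only the email content is untrusted.
steps = [
    ("read_email", False),
    ("read_onedrive", True),
    ("read_sharepoint", True),
    ("send_to_microsoft_domain", True),
]
static_results = [per_action_check(action) for action, _ in steps]
state = WorkflowState()
workflow_results = [workflow_check(state, action, trusted) for action, trusted in steps]
print(static_results)    # each step evaluated in isolation
print(workflow_results)  # each step evaluated against workflow history
```

Under these assumptions, the static check approves all four steps, while the workflow-level check approves the reads and refuses the final send.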

Prompt injection is an attack that exploits a large language model's difficulty in separating trusted instructions from untrusted input. In agents, it escalates into the equivalent of remote code execution: the attacker leverages the agent's autonomy to execute an arbitrary set of tasks across systems.

Safe vs. Secure

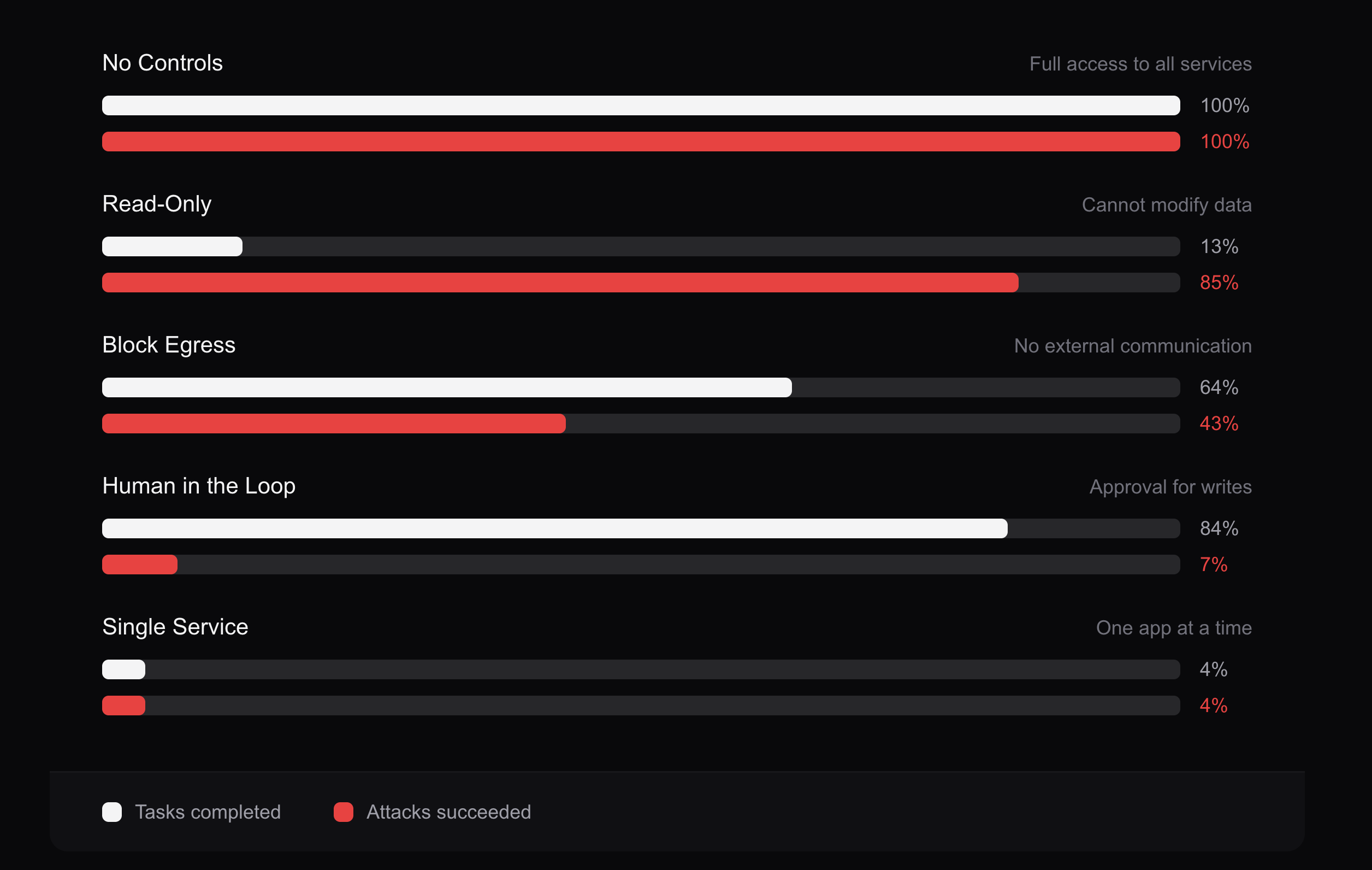

The intuitive response to reducing agent risk is to add restrictions: read-only modes, egress blocking, mandatory human approval for sensitive actions. Reduce the attack surface, reduce the risk. It's the standard security playbook.

We ran attack simulations to test this assumption.

The more services an agent can access, the more useful it is, but also the larger the attack surface. Restricting an agent to one system makes it safe but useless. You've just rebuilt a single-purpose app with extra steps.

The tradeoff curve is brutal. At one extreme: agents with broad access that can actually do work, but are vulnerable to prompt injection cascading across systems. At the other: agents that can't be exploited, but do little more than a conventional application.

Users aren't waiting for security to catch up. OpenClaw's success isn't despite the broad access: it's because of it.

You cannot rely on the agent to decide whether it should take a dangerous action. You must prevent it at the authorization layer.

The answer isn't choosing a point on this curve. It's changing the curve entirely.

The Authorization Inversion

For 20 years, IAM asked two questions: who are you, and what can you do? This architecture fundamentally relies on the isolation provided by applications.

We can no longer rely on identity and coarse-grained access control alone. For secure agents, we need a task-based authorization model, where what the agent can access changes dynamically based on the job it's performing.

An agent summarizing an email doesn't need access to the entire mailbox.
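A rough sketch of what task-based scoping could look like, in Python. The task names, the `(action, resource)` pair format, and the `scope_for_task` helper are hypothetical and only serve to illustrate the idea that the task, not the agent's identity, determines what is reachable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskScope:
    """The set of (action, resource) pairs a single task is allowed to use."""
    task: str
    allowed: frozenset


def scope_for_task(task: str, resource_id: str) -> TaskScope:
    """Derive the minimal scope for a task from a hypothetical task catalog."""
    if task == "summarize_email":
        # One message, read-only. Not the whole mailbox.
        return TaskScope(task, frozenset({("mail.read", f"message:{resource_id}")}))
    if task == "schedule_followup":
        return TaskScope(task, frozenset({
            ("calendar.read", f"calendar:{resource_id}"),
            ("calendar.write", f"calendar:{resource_id}"),
        }))
    # Unknown tasks get no access at all.
    return TaskScope(task, frozenset())


def is_permitted(scope: TaskScope, action: str, resource: str) -> bool:
    return (action, resource) in scope.allowed


scope = scope_for_task("summarize_email", "msg-123")
can_read_target = is_permitted(scope, "mail.read", "message:msg-123")
can_read_other = is_permitted(scope, "mail.read", "message:msg-999")
can_send = is_permitted(scope, "mail.send", "message:msg-123")
```

In this sketch the same agent would receive a different scope the moment it moves on to a different task, and an unrecognized task defaults to nothing.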

The urgency is real: 97% of organizations with AI breaches lacked proper access controls. Not authentication failures. Authorization gaps for a new type of principal.

Context becomes the credential. Intent evaluation becomes the perimeter.

What the Solution Looks Like

Agent authorization requires new capabilities and patterns now taking shape. The goal is to let an agent complete its job, while constraining it at the workflow level.

From an architecture perspective, the key move is separating what the agent can do in theory from what it is permitted to do right now. That requires shifting from static, app-scoped permissions to continuous, action-scoped decisions.

Practically, a secure agent architecture is built on four interconnected layers: Identity, Access, Interoperability, and Auditability.

Identity: Agents need their own dedicated identity as first-class principals, with clear ownership, credentials, lifecycle, rotation, and policy enforcement. That identity model must represent all types of agents, not just workflows.

Access: An agent's access must be task-scoped: granted just-in-time, narrowly scoped to a single action (or a small set of actions), and short-lived. In the agent world, every tool call becomes an authorization decision.

This layer needs to give the agent autonomy for routine work, but require approval from the right human when risk spikes.
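As an illustration of the access layer, the sketch below treats each tool call as a decision with three outcomes: allow, step up to a human, or deny. The risk scores, the threshold, and the function names are assumptions made for the example; a real deployment would derive risk from policy rather than a hard-coded table.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"  # pause and ask the right human
    DENY = "deny"


@dataclass(frozen=True)
class Grant:
    """A just-in-time grant: one agent, a few actions, a short lifetime."""
    agent_id: str
    actions: frozenset
    expires_at: float  # epoch seconds


# Hypothetical risk scores per action (higher is riskier).
RISK = {"crm.read": 1, "crm.update": 3, "payments.send": 9}
STEP_UP_THRESHOLD = 5


def issue_grant(agent_id: str, actions, now: float, ttl_seconds: float = 60.0) -> Grant:
    return Grant(agent_id, frozenset(actions), now + ttl_seconds)


def authorize_tool_call(grant: Grant, agent_id: str, action: str, now: float) -> Decision:
    """Evaluate a single tool call against the grant and the risk table."""
    if grant.agent_id != agent_id or now >= grant.expires_at:
        return Decision.DENY
    if action not in grant.actions:
        return Decision.DENY
    # Actions without a score are treated as risky by default.
    if RISK.get(action, STEP_UP_THRESHOLD) >= STEP_UP_THRESHOLD:
        return Decision.STEP_UP
    return Decision.ALLOW


grant = issue_grant("agent-7", {"crm.read", "payments.send"}, now=1000.0)
routine = authorize_tool_call(grant, "agent-7", "crm.read", now=1010.0)
risky = authorize_tool_call(grant, "agent-7", "payments.send", now=1010.0)
out_of_scope = authorize_tool_call(grant, "agent-7", "crm.update", now=1010.0)
expired = authorize_tool_call(grant, "agent-7", "crm.read", now=1061.0)
```

The timestamps are passed in explicitly so the behavior is easy to reason about: the same routine read that is allowed ten seconds into the grant is denied once the sixty-second lifetime has passed.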

Interoperability: Agents need to interact with the services and applications that enterprises use today, but these services were not designed to be used by agents.

To grant agents access securely, an interoperability layer sits between the agents and the legacy services, so that the protections of the identity and access layers extend to those services.

Auditability: Security teams need workflow-level provenance for critical visibility whenever an agent performs an action. Without that chain of custody, incident response collapses into “the agent did it.”
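One way to picture a chain of custody is a hash-chained log, where every entry records what triggered the action and commits to the entry before it. This is a sketch under our own assumptions (the field names and the SHA-256 chaining are illustrative choices), not a description of any specific audit product.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def _digest(entry: dict) -> str:
    """Stable SHA-256 digest of an entry's fields."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(log: list, workflow_id: str, agent_id: str,
                 action: str, triggered_by: str) -> dict:
    """Append an event that commits to the previous entry's hash."""
    entry = {
        "workflow_id": workflow_id,
        "agent_id": agent_id,
        "action": action,
        "triggered_by": triggered_by,  # e.g. a user request or an ingested document
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry["hash"] = _digest(entry)  # digest is computed before "hash" is added
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every digest and check that each entry links to the previous one."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_event(log, "wf-42", "agent-7", "crm.read", "user:alice request")
append_event(log, "wf-42", "agent-7", "mail.draft", "crm.read result")
append_event(log, "wf-42", "agent-7", "calendar.write", "user:alice request")
intact = verify_chain(log)

log[1]["action"] = "mail.send"  # simulate tampering with a past entry
tampered_ok = verify_chain(log)
```

With a record like this, an investigator can walk backward from any action to the input that triggered it, and an edit to a past entry breaks verification.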

In this architecture, prompts are not a security boundary. The enforcement lives in identity and authorization.

How We Are Building This at Auth0 and Okta

Agent Identity treats agents as first-class principals with dedicated lifecycle, credentials, and policy enforcement. A new principal type alongside users and applications. No more shoehorning agents into user accounts or service principals.

Fine-Grained Authorization (FGA) enables context-dependent rules that evaluate action, resource, and intent together. Not "can this identity access CRM" but "can this agent read this customer record for this purpose at this moment."
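To show the shape of such a question, here is a small Python sketch of a check that evaluates agent, action, resource, purpose, and time together. It does not use or represent the FGA product's API; the relationship tuples, purpose table, and business-hours rule are hypothetical stand-ins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    agent: str
    action: str
    resource: str
    purpose: str
    hour_utc: int


# Hypothetical relationship tuples: (agent, relation, resource).
ASSIGNMENTS = {("agent:sales-assistant", "assigned_to", "customer:acme")}

# Hypothetical purposes that each action may serve.
ALLOWED_PURPOSES = {
    "read": {"prepare_renewal", "draft_followup"},
    "export": set(),  # no purpose justifies export in this sketch
}

BUSINESS_HOURS = range(8, 18)  # 08:00 to 17:59 UTC, an assumed policy


def check(req: Request) -> bool:
    """All three conditions must hold: relationship, purpose, and time."""
    related = (req.agent, "assigned_to", req.resource) in ASSIGNMENTS
    purpose_ok = req.purpose in ALLOWED_PURPOSES.get(req.action, set())
    time_ok = req.hour_utc in BUSINESS_HOURS
    return related and purpose_ok and time_ok


read_ok = check(Request("agent:sales-assistant", "read", "customer:acme", "prepare_renewal", 10))
wrong_purpose = check(Request("agent:sales-assistant", "read", "customer:acme", "marketing_blast", 10))
wrong_record = check(Request("agent:sales-assistant", "read", "customer:globex", "prepare_renewal", 10))
after_hours = check(Request("agent:sales-assistant", "read", "customer:acme", "prepare_renewal", 23))
export_attempt = check(Request("agent:sales-assistant", "export", "customer:acme", "prepare_renewal", 10))
```

The same identity gets a different answer depending on the record, the stated purpose, and the time of the request.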

Token Vault provides secure credential storage so agents never hold long-lived secrets. Credentials are retrieved at action time, scoped to the task, and expired immediately after. The credential that worked five minutes ago won't work now.
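The pattern can be sketched with a toy in-memory vault. This is not the Token Vault API; the `InMemoryVault` class and its `store`, `checkout`, and `redeem` methods are hypothetical names used to illustrate short-lived, scoped, single-use credentials.

```python
import secrets


class InMemoryVault:
    """Toy vault: long-lived secrets stay inside; agents only get short-lived tokens."""

    def __init__(self) -> None:
        self._secrets = {}  # service -> long-lived secret, never returned to agents
        self._issued = {}   # token -> (service, scope, expires_at)

    def store(self, service: str, secret: str) -> None:
        self._secrets[service] = secret

    def checkout(self, service: str, scope: str, now: float, ttl_seconds: float = 30.0) -> str:
        """Issue a random token bound to one service and one scope."""
        if service not in self._secrets:
            raise KeyError(f"no credential stored for {service}")
        token = secrets.token_urlsafe(16)
        self._issued[token] = (service, scope, now + ttl_seconds)
        return token

    def redeem(self, token: str, service: str, scope: str, now: float) -> bool:
        """Validate a token exactly once; it is removed whether or not it is valid."""
        record = self._issued.pop(token, None)
        if record is None:
            return False
        issued_service, issued_scope, expires_at = record
        return issued_service == service and issued_scope == scope and now < expires_at


vault = InMemoryVault()
vault.store("crm", "long-lived-api-key")

token = vault.checkout("crm", "contacts.read", now=0.0)
first_use = vault.redeem(token, "crm", "contacts.read", now=10.0)
second_use = vault.redeem(token, "crm", "contacts.read", now=11.0)

stale = vault.checkout("crm", "contacts.read", now=0.0)
used_five_minutes_later = vault.redeem(stale, "crm", "contacts.read", now=300.0)
```

In this sketch the agent never sees `long-lived-api-key`. It only handles a token that works once, for one scope, within a short window.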

Cross-App Access (XAA) manages consent and delegation when agents operate across multiple systems, with attestation chains showing where credentials came from and how permissions were narrowed. We're driving this through IETF standards work. No more opaque token exchanges between apps.

CIBA-based step-up ensures the right human is in the loop for high-risk actions. The agent proceeds autonomously for routine operations, but we request human confirmation when stakes are high. Autonomy where it helps, oversight where it matters.

We're building these with enterprise design partners now.

Refusing the False Choice

The internet is shifting from humans clicking through interfaces to autonomous software acting across hundreds of connected systems. The answer isn't adding silos back: it's authorization that understands context.

An agent should access Salesforce and Workday in the same workflow, but not export customer data to an external address when the instruction came from an email attachment. That requires one question, evaluated continuously:

Should this action, in this context, at this moment, be permitted?

The technology to solve this exists today. Learn how Okta and Auth0 secure AI agents at okta.com/ai and auth0.com/ai. We are here to help you innovate with AI without having to worry about security. Collaborate with us.