With the 2025-03-26 revision of the specification, the Model Context Protocol (MCP) team made a decision that confused a lot of people: they deprecated the old HTTP+SSE transport in favor of Streamable HTTP.

The shift drew mixed reactions. Many developers asked questions like "Why fix what wasn't broken?" or "Is this new method even necessary?" The change might have felt unexpected, but for those working in Identity or Security, it was a step in the right direction for MCP.

To understand why, we have to look at how we used to talk to AI agents, and why that "conversation" needed improvement.

Security Risks of the Always-On Nature of Server-Sent Events (SSE)

Imagine you want to order a pizza.

In the old MCP world with SSE, you wouldn't just call the pizza place, place your order, and hang up. You would call them, they would pick up, and you would stay on the line forever. Even if you weren't talking, the line had to stay open just in case the pizza place wanted to tell you something later like "Cheese is extra." In technical terms, SSE requires a persistent open connection that is difficult to secure with standard middleware.

This is a persistent connection. While it’s great for real-time updates, it was structurally weird because you actually needed two phones to make it work:

- Phone A (Listening): You kept this line open 24/7 just to hear updates (/sse).

- Phone B (Talking): You had to pick up a second phone to actually place your order (/sse/messages).

This "Always-On" setup created a significant blind spot for security teams. When you open a persistent connection, your security layer, whether it's an API gateway, a reverse proxy, or just the authentication middleware in your application code, only checks the ID once. After that initial check, the door essentially stays propped open, allowing traffic to flow in and out without further inspection. Worse yet, because the browser's EventSource API cannot set custom headers on that initial handshake, developers were often forced to pass sensitive access tokens directly in the URL query string (?token=xyz). That is the digital equivalent of taping your house key to the front door. It gets you inside, but it leaves your credentials visible in every server log and browser history file along the way.

Streamable HTTP Enables Two-Way Communication

Streamable HTTP changes the model entirely. Instead of keeping a “phone line” open and juggling two connections, all communication happens on a single endpoint (usually /mcp).

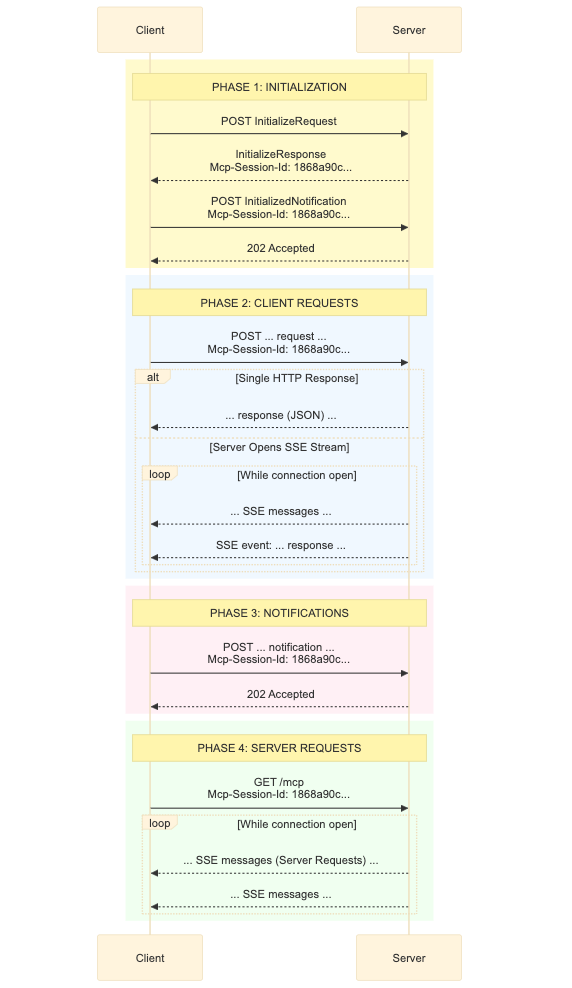

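According to the official specification, the client sends a standard POST request every time it wants to do something, with an Accept header listing both application/json and text/event-stream. If the message is a request, the server answers on that same response, either with a single JSON body or, when it needs to send a long answer or several messages, by upgrading the response to an SSE stream. If the message is only a notification or a response, which needs no reply, the server returns 202 Accepted with no body.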
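
To make the request and response shapes concrete, here is a minimal client-side sketch using only the Python standard library. It builds the POST without sending it and shows how a client might decide how to read the reply. The URL, the function names, and the example token are assumptions made for illustration; they do not come from the spec or from any SDK.

```python
import json
import urllib.request

MCP_ENDPOINT = "https://example.com/mcp"  # hypothetical server URL


def build_mcp_post(message: dict, access_token: str) -> urllib.request.Request:
    """Build (but do not send) one POST carrying a single JSON-RPC message."""
    headers = {
        "Content-Type": "application/json",
        # The client advertises that it can read either response shape.
        "Accept": "application/json, text/event-stream",
        # The credential travels in a header, not in the URL.
        "Authorization": f"Bearer {access_token}",
    }
    return urllib.request.Request(
        MCP_ENDPOINT,
        data=json.dumps(message).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def classify_response(status: int, content_type: str) -> str:
    """Decide how the client should read the server's reply."""
    if status == 202:
        return "accepted"  # acknowledged, no body to read
    if content_type.startswith("text/event-stream"):
        return "stream"    # read SSE events from this same response
    if content_type.startswith("application/json"):
        return "json"      # one JSON-RPC response in the body
    return "unexpected"


request = build_mcp_post({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}, "example-token")
```

Every call goes through build_mcp_post, so every envelope carries its own credentials and can be inspected on its own.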

Think of it like standard mail.

- Client: Send a sealed envelope saying "Please analyze this file."

- Server: Opens it, does the work, and sends a reply (or a series of replies) back.

Why is this better for us? Because we have a Mailroom. Your infrastructure (WAFs, Load Balancers, and Auth0) acts like a corporate mailroom. It is designed to scan every single envelope that comes through the door.

With Streamable HTTP, we can stamp a standard Authorization: Bearer header on every single envelope. The mailroom checks the stamp on every message, not just the first one. It’s safer, cleaner, and honestly, it’s just standard HTTP.
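
The "check the stamp on every envelope" idea can be sketched as a function that runs on each incoming request. This is a simplified illustration, not a production validator: the in-memory token table and the names VALID_TOKENS and check_envelope are hypothetical, and a real server would verify a signed access token issued by its authorization server.

```python
import hmac
from typing import Mapping, Tuple

# Hypothetical token table, used only to keep the sketch self-contained.
VALID_TOKENS = {"token-for-user-123": "user-123"}


def check_envelope(headers: Mapping[str, str]) -> Tuple[int, str]:
    """Run on every request: return (status, user_id or failure reason)."""
    auth = headers.get("Authorization", "")
    scheme, _, token = auth.partition(" ")
    if scheme.lower() != "bearer" or not token:
        return 401, "missing bearer token"
    for known, user_id in VALID_TOKENS.items():
        # compare_digest avoids leaking how much of the token matched.
        if hmac.compare_digest(known.encode(), token.encode()):
            return 200, user_id
    return 401, "invalid token"
```

Because the check takes only the headers of the current request, it works the same way in application middleware, a reverse proxy hook, or an API gateway policy.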

The Streamable HTTP sequence is illustrated in the following diagram:

Infrastructure-Friendly by Design

A major advantage of this switch is how well it plays with existing cloud infrastructure. Because the server now operates as an independent process handling standard HTTP requests, it gains what we call Infrastructure-friendly features:

- Load Balancing: You can finally put your MCP server behind a standard AWS Application Load Balancer or Cloudflare proxy without worrying about "sticky" sessions breaking the flow.

- CORS & Auth: Because it uses standard methods (POST, GET, OPTIONS), you can use standard CORS policies and authentication middleware without custom hacks.

The New Mcp-Session-Id Header

With the new spec comes a new header: Mcp-Session-Id. This is the sticker the server puts on your shirt so it remembers you five minutes from now.

The spec defines a session as logically related interactions starting with the Initialization Phase. While this sounds simple, the spec mandates strict rules for how you handle this lifecycle:

- Initialization: You can only assign a Session ID during the InitializeResult response.

- Format: The ID must contain only visible ASCII characters (0x21 to 0x7E).

- Enforcement: If you issued an ID, the client must include it in all future requests. If they forget, you should return a 400 Bad Request.

- Revocation: If you terminate a session (e.g., due to a security timeout), you must respond with 404 Not Found to any subsequent requests. This tells the client to start over with a fresh InitializeRequest.
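
The lifecycle rules above can be sketched as a small status-code decision. The in-memory set, the function names, and the use of an opaque random ID are assumptions chosen to keep the example short; the point here is only which status each rule produces.

```python
import re
import secrets
from typing import Optional

VISIBLE_ASCII = re.compile(r"[\x21-\x7e]+")  # 0x21 to 0x7E, no spaces or control chars

active_sessions = set()  # IDs issued and not yet terminated (in-memory for the sketch)


def is_valid_format(session_id: str) -> bool:
    return VISIBLE_ASCII.fullmatch(session_id) is not None


def start_session() -> str:
    """Called while building the response that carries InitializeResult."""
    session_id = secrets.token_urlsafe(32)  # URL-safe base64, so visible ASCII only
    active_sessions.add(session_id)
    return session_id


def terminate_session(session_id: str) -> None:
    active_sessions.discard(session_id)


def session_status(method: str, session_id: Optional[str]) -> int:
    """Return the HTTP status the session rules call for on one incoming message."""
    if method == "initialize":
        return 200  # no session yet; the ID is assigned on this response
    if session_id is None:
        return 400  # an ID was issued but the client did not send it
    if session_id not in active_sessions:
        return 404  # terminated or unknown: the client must re-initialize
    return 200
```

A client that receives the 404 is expected to discard its stored ID and send a new InitializeRequest.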

The spec suggests this ID should be cryptographically secure, but it leaves the format up to you. Many may think they can generate a random UUID and move on.

Don't do that. A random number is like a generic "Visitor" badge. If I find one on the floor, I can clip it on and walk right past the front desk.

Use a JWT as the MCP Session ID

Instead of a random number, you could use a JSON Web Token (JWT) as your MCP Session ID.

Think of this as a badge with your photo holographically printed on it.

- The Handout: When the client connects, you mint a JWT that says, "This session belongs to User 123."

- The Check: Every time the client sends a request, you look at two things:

  - The Access Token (Your security clearance). It lists exactly what you are authorized to access (Scopes).

  - The Session ID (The visitor badge). This is the temporary badge printed just for this specific visit to the building.

If the User ID on the security clearance doesn't match the User ID on the visitor badge, you know something is wrong.

Security Checklist for Using Streamable HTTP with MCP

If you haven’t already switched to Streamable HTTP, here are three things you need to double-check to ensure a smooth transition:

- Check the Return Address (Origin): The spec warns about DNS rebinding. In plain terms: validate that the request originates from a trusted domain. You can do this by validating the Origin header on all incoming connections.

- When running locally, servers SHOULD bind only to localhost (127.0.0.1) rather than all network interfaces (0.0.0.0).

- Servers SHOULD implement proper authentication for all connections.

Without these protections, attackers could use DNS rebinding to interact with local MCP servers from remote websites.
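
The first two items can be sketched with Python's built-in http.server. The allowlist contents, the handler, and the helper names are hypothetical, and the choice to reject requests that carry no Origin header at all is an assumption of this sketch; you may need to relax it for non-browser clients that authenticate another way.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical allowlist of origins permitted to call this server.
TRUSTED_ORIGINS = {"http://localhost:3000", "https://app.example.com"}


def is_trusted_origin(origin: Optional[str]) -> bool:
    if not origin:
        return False  # assumption: a missing Origin header is treated as untrusted
    parts = urlsplit(origin)
    normalized = f"{parts.scheme}://{parts.netloc}".lower()
    return normalized in TRUSTED_ORIGINS


class McpHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if not is_trusted_origin(self.headers.get("Origin")):
            self.send_error(403, "Origin not allowed")
            return
        # Authentication and message handling would follow here.
        self.send_response(202)
        self.end_headers()


def make_local_server(port: int = 0) -> HTTPServer:
    # Bind to loopback only, never 0.0.0.0, so other machines cannot reach it.
    # Port 0 asks the OS for any free port.
    return HTTPServer(("127.0.0.1", port), McpHandler)
```

Calling make_local_server().serve_forever() would start the loopback-only listener. The Origin check complements authentication and does not replace it.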

Making the Switch to Streamable HTTP

The switch to Streamable HTTP might feel like extra homework, but it's a way to make MCP more secure using standards. We are treating AI Agents like standard, robust APIs.

By moving away from persistent, hard-to-secure connections and embracing the "sealed letter" model of standard HTTP POST requests, we gain the ability to inspect traffic, enforce strict CORS policies, and bind sessions to verified user identities. This shift doesn't just simplify your infrastructure; it ensures that the agents accessing your data are as secure as the applications you build every day.

Ready to secure your MCP servers? Check out our docs on Auth for MCP to learn how Auth0 can make your MCP Servers secure.

About the author

Will Johnson

Developer Advocate

Will Johnson is a Developer Advocate at Auth0. He loves teaching topics in a digestible way to empower more developers. He writes blog posts, records screencasts, and writes eBooks to help developers. He's also an instructor on egghead.io.
